Nicholas Weiler

www.ucsf.edu

Originally posted July 30, 2019

Here is an excerpt:

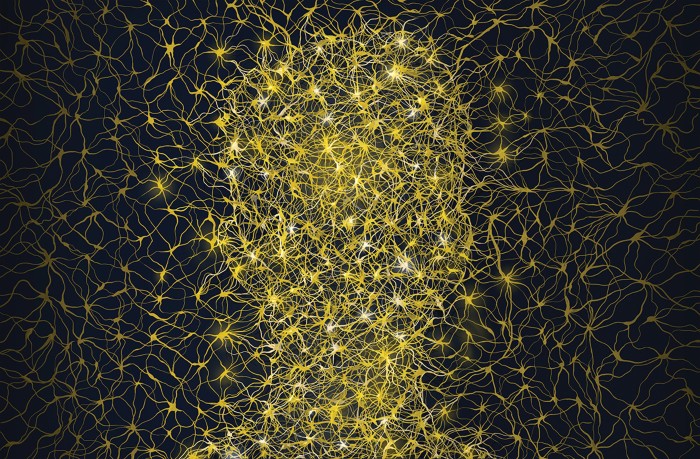

“In unearthing these ethical issues, we try as much as possible to get out of our armchairs and actually observe how people are interacting with these new technologies. We interview everyone from patients and family members to clinicians and researchers,” Chiong said. “We also work with philosophers, lawyers, and others with experience in biomedicine, as well as anthropologists, sociologists and others who can help us understand the clinical challenges people are actually facing as well as their concerns about new technologies.”

Some of the top issues on Chiong’s mind include ensuring patients understand how the data recorded from their brains are being used by researchers; protecting the privacy of this data; and determining what kind of control patients will ultimately have over their brain data.

“As with all technology, ethical questions about neurotechnology are embedded not just in the technology or science itself, but also the social structure in which the technology is used,” Chiong added. “These questions are not just the domain of scientists, engineers, or even professional ethicists, but are part of larger societal conversation we’re beginning to have about the appropriate applications of technology, and personal data, and when it’s important for people to be able to opt out or say no.”

The info is here.