Journal of Personality and Social Psychology,

Available at SSRN: https://ssrn.com/abstract=3409146

Abstract

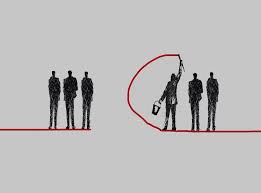

Which social decisions are influenced by intuitive processes? Which by deliberative processes? The dual-process approach to human sociality has emerged in the last decades as a vibrant and exciting area of research. Yet, a perspective that integrates empirical and theoretical work is lacking. This review and meta-analysis synthesizes the existing literature on the cognitive basis of cooperation, altruism, truth-telling, positive and negative reciprocity, and deontology, and develops a framework that organizes the experimental regularities. The meta-analytic results suggest that intuition favours a set of heuristics that are related to the instinct for self-preservation: people avoid being harmed, avoid harming others (especially when there is a risk of harm to themselves), and are averse to disadvantageous inequalities. Finally, this paper highlights some key research questions to further advance our understanding of the cognitive foundations of human sociality.

Here is my summary:

This article reviews and meta-analyzes research on the dual-process approach to human sociality. Capraro distinguishes two main systems that shape human social behavior: an intuitive system and a deliberative system. The intuitive system is fast, automatic, and often relies on heuristics, or mental shortcuts. The deliberative system is slower and more effortful, and it relies on careful, reflective reasoning.

Capraro synthesizes the literature on whether intuition or deliberation drives cooperation, altruism, truth-telling, positive and negative reciprocity, and deontology. The meta-analytic results suggest that intuition favors a set of heuristics tied to the instinct for self-preservation. People intuitively avoid being harmed. They also avoid harming others, especially when doing so carries a risk of harm to themselves. Finally, they are averse to disadvantageous inequalities, meaning outcomes in which they end up worse off than others. In other words, the intuitive default is not prosociality as such but self-protection.

The deliberative system, by contrast, is the slower route through which people weigh their options more carefully before deciding. Capraro concludes that the dual-process approach provides a framework that organizes the experimental regularities on the cognitive basis of human social behavior, from cooperation and altruism to truth-telling and deontology. The paper also highlights key open research questions for advancing our understanding of the cognitive foundations of human sociality.