Cade Metz

The New York Times

Originally posted March 1, 2019

Here is an excerpt:

As companies and governments deploy these A.I. technologies, researchers are also realizing that some systems are woefully biased. Facial recognition services, for instance, can be significantly less accurate when trying to identify women or someone with darker skin. Other systems may include security holes unlike any seen in the past. Researchers have shown that driverless cars can be fooled into seeing things that are not really there.

All this means that building ethical artificial intelligence is an enormously complex task. It gets even harder when stakeholders realize that ethics are in the eye of the beholder.

As some Microsoft employees protest the company’s military contracts, Mr. Smith said that American tech companies had long supported the military and that they must continue to do so. “The U.S. military is charged with protecting the freedoms of this country,” he told the conference. “We have to stand by the people who are risking their lives.”

Though some Clarifai employees draw an ethical line at autonomous weapons, others do not. Mr. Zeiler argued that autonomous weapons will ultimately save lives because they would be more accurate than weapons controlled by human operators. “A.I. is an essential tool in helping weapons become more accurate, reducing collateral damage, minimizing civilian casualties and friendly fire incidents,” he said in a statement.

The info is here.

Welcome to the Nexus of Ethics, Psychology, Morality, Philosophy and Health Care

Sunday, March 31, 2019

Saturday, March 30, 2019

AI Safety Needs Social Scientists

Geoffrey Irving and Amanda Askell

distill.pub

Originally published February 19, 2019

Here is an excerpt:

Learning values by asking humans questions

We start with the premise that human values are too complex to describe with simple rules. By “human values” we mean our full set of detailed preferences, not general goals such as “happiness” or “loyalty”. One source of complexity is that values are entangled with a large number of facts about the world, and we cannot cleanly separate facts from values when building ML models. For example, a rule that refers to “gender” would require an ML model that accurately recognizes this concept, but Buolamwini and Gebru found that several commercial gender classifiers with a 1% error rate on white men failed to recognize black women up to 34% of the time. Even where people have correct intuition about values, we may be unable to specify precise rules behind these intuitions. Finally, our values may vary across cultures, legal systems, or situations: no learned model of human values will be universally applicable.
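The point about commercial gender classifiers depends on disaggregated evaluation. An error rate averaged over everyone can look small while one subgroup fares far worse. The sketch below is an illustrative assumption, not code from the article or from Buolamwini and Gebru. The function name `error_rates_by_group` and the toy records are hypothetical, and the numbers are invented to show the effect, not to reproduce the study's results.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """Return (overall_error_rate, {group: error_rate}).

    records: iterable of (group, true_label, predicted_label) tuples.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, truth, pred in records:
        totals[group] += 1
        if truth != pred:
            errors[group] += 1
    n = sum(totals.values())
    overall = sum(errors.values()) / n
    per_group = {g: errors[g] / totals[g] for g in totals}
    return overall, per_group


# Hypothetical toy data (NOT the study's data): group "A" has 100 examples
# with 1 misclassification, group "B" has 10 examples with 3.
records = (
    [("A", 1, 1)] * 99 + [("A", 1, 0)]
    + [("B", 1, 1)] * 7 + [("B", 1, 0)] * 3
)

overall, per_group = error_rates_by_group(records)
print(f"overall: {overall:.1%}")
for group, rate in sorted(per_group.items()):
    print(f"{group}: {rate:.1%}")
```

With these invented records the overall error rate is about 3.6%, while group "A" sits at 1% and group "B" at 30%. Reporting only the pooled number would hide the gap.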

If humans can’t reliably report the reasoning behind their intuitions about values, perhaps we can make value judgements in specific cases. To realize this approach in an ML context, we ask humans a large number of questions about whether an action or outcome is better or worse, then train on this data. “Better or worse” will include both factual and value-laden components: for an AI system trained to say things, “better” statements might include “rain falls from clouds”, “rain is good for plants”, “many people dislike rain”, etc. If the training works, the resulting ML system will be able to replicate human judgement about particular situations, and thus have the same “fuzzy access to approximate rules” about values as humans. We also train the ML system to come up with proposed actions, so that it knows both how to perform a task and how to judge its performance. This approach works at least in simple cases, such as Atari games, simple robotics tasks, and language-specified goals in gridworlds. The questions we ask change as the system learns to perform different types of actions, which is necessary as the model of what is better or worse will only be accurate if we have applicable data to generalize from.
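The excerpt does not spell out how "is A better than B?" answers become a training signal. One common way to do it is a Bradley-Terry (logistic) preference model: learn a reward function r so that the probability a rater prefers A over B is sigmoid(r(A) - r(B)), then use r to rank proposed actions. The sketch below assumes that approach with a linear reward; it is not the authors' code. The feature names, the toy comparisons, and the hyperparameters (`lr`, `epochs`) are all hypothetical.

```python
import math
import random


def train_reward_model(comparisons, dim, lr=0.1, epochs=200, seed=0):
    """Fit linear reward weights w from pairwise human judgements.

    comparisons: list of (better_features, worse_features) pairs, each a list
    of `dim` floats describing an action or outcome.
    Bradley-Terry model: P(better preferred) = sigmoid(w . better - w . worse),
    fitted by stochastic gradient ascent on the log-likelihood.
    """
    rng = random.Random(seed)
    w = [0.0] * dim
    data = list(comparisons)
    for _ in range(epochs):
        rng.shuffle(data)
        for better, worse in data:
            diff = [b - c for b, c in zip(better, worse)]
            margin = sum(wi * di for wi, di in zip(w, diff))
            p = 1.0 / (1.0 + math.exp(-margin))  # model's P(better > worse)
            # d/dw of log sigmoid(margin) is (1 - p) * diff.
            w = [wi + lr * (1.0 - p) * di for wi, di in zip(w, diff)]
    return w


def reward(w, features):
    """Learned reward of an action or outcome described by `features`."""
    return sum(wi * xi for wi, xi in zip(w, features))


# Hypothetical features: [is_accurate, is_harmful].
# Each pair records that a rater judged the first item better than the second.
comparisons = [
    ([1.0, 0.0], [0.0, 0.0]),
    ([1.0, 0.0], [1.0, 1.0]),
    ([0.0, 0.0], [0.0, 1.0]),
    ([1.0, 0.0], [0.0, 1.0]),
]
w = train_reward_model(comparisons, dim=2)

# Use the learned reward to judge proposed actions and pick the best one.
candidates = {
    "accurate, harmless": [1.0, 0.0],
    "accurate, harmful": [1.0, 1.0],
    "inaccurate, harmless": [0.0, 0.0],
}
best = max(candidates, key=lambda name: reward(w, candidates[name]))
print(w, best)
```

Pairwise questions are used here because, as the excerpt argues, people can often say which of two outcomes is better even when they cannot state the rule behind the judgement. A real system would replace the linear reward with a learned network and would need fresh comparisons as its behavior changes.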

The info is here.

Friday, March 29, 2019

Artificial Morality

Robert Koehler

www.commondreams.org

Originally posted March 14, 2019

Artificial Intelligence is one thing. Artificial morality is another. It may sound something like this:

“First, we believe in the strong defense of the United States and we want the people who defend it to have access to the nation’s best technology, including from Microsoft.”

The words are those of Microsoft president Brad Smith, writing on a corporate blogsite last fall in defense of the company’s new contract with the U.S. Army, worth $479 million, to make augmented reality headsets for use in combat. The headsets, known as the Integrated Visual Augmentation System, or IVAS, are a way to “increase lethality” when the military engages the enemy, according to a Defense Department official. Microsoft’s involvement in this program set off a wave of outrage among the company’s employees, with more than a hundred of them signing a letter to the company’s top executives demanding that the contract be canceled.

“We are a global coalition of Microsoft workers, and we refuse to create technology for warfare and oppression. We are alarmed that Microsoft is working to provide weapons technology to the U.S. Military, helping one country’s government ‘increase lethality’ using tools we built. We did not sign up to develop weapons, and we demand a say in how our work is used.”

The info is here.

The history and future of digital health in the field of behavioral medicine

Danielle Arigo, Danielle E. Jake-Schoffman, Kathleen Wolin, Ellen Beckjord, & Eric B. Hekler

J Behav Med (2019) 42: 67.

https://doi.org/10.1007/s10865-018-9966-z

Abstract

Since its earliest days, the field of behavioral medicine has leveraged technology to increase the reach and effectiveness of its interventions. Here, we highlight key areas of opportunity and recommend next steps to further advance intervention development, evaluation, and commercialization with a focus on three technologies: mobile applications (apps), social media, and wearable devices. Ultimately, we argue that the future of digital health behavioral science research lies in finding ways to advance more robust academic-industry partnerships. These include academics consciously working towards preparing and training the workforce of the twenty-first century for digital health, actively working towards advancing methods that can balance the needs for efficiency in industry with the desire for rigor and reproducibility in academia, and the need to advance common practices and procedures that support more ethical practices for promoting healthy behavior.

Here is a portion of the Summary:

An unknown landscape of privacy and data security

Another relatively new set of challenges centers around the issues of privacy and data security presented by digital health tools. First, some commercially available technologies that were originally produced for purposes other than promoting healthy behavior (e.g., social media) are now being used to study health behavior and deliver interventions. This poses a variety of potential privacy issues depending on the privacy settings used, including the fact that data from non-participants may inadvertently be viewed and collected, and their rights should also be considered as part of study procedures (Arigo et al., 2018). Privacy may be of particular concern as apps begin to incorporate additional smartphone technologies such as GPS location tracking and cameras (Nebeker et al., 2015). Second, for commercial products that were originally designed for health behavior change (e.g., apps), researchers need to carefully read and understand the associated privacy and security agreements, be sure that participants understand these agreements, and include a summary of this information in their applications to ethics review boards.

Thursday, March 28, 2019

An Empirical Evaluation of the Failure of the Strickland Standard to Ensure Adequate Counsel to Defendants with Mental Disabilities Facing the Death Penalty

Michael L. Perlin, Talia Roitberg Harmon, & Sarah Chatt

Social Science Research Network

http://dx.doi.org/10.2139/ssrn.3332730

Abstract

Anyone who has been involved with death penalty litigation in the past four decades knows that one of the most scandalous aspects of that process—in many ways, the most scandalous—is the inadequacy of counsel so often provided to defendants facing execution. By now, virtually anyone with even a passing interest is well versed in the cases and stories about sleeping lawyers, missed deadlines, alcoholic and disoriented lawyers, and, more globally, lawyers who simply failed to vigorously defend their clients. This is not news.

And, in the same vein, anyone who has been so involved with this area of law and policy for the past 35 years knows that it is impossible to make sense of any of these developments without a deep understanding of the Supreme Court’s decision in Strickland v. Washington, 466 U.S. 668 (1984), the case that established a pallid, virtually-impossible-to-fail test for adequacy of counsel in such litigation. Again, this is not news.

We also know that some of the most troubling results in Strickland interpretations have come in cases in which the defendant was mentally disabled—either by serious mental illness or by intellectual disability. Some of the decisions in these cases—rejecting Strickland-based appeals—have been shocking, making a mockery out of a constitutionally based standard.

To the best of our knowledge, no one has—prior to this article—undertaken an extensive empirical analysis of how one discrete US federal circuit court of appeals has dealt with a wide array of Strickland-claim cases in cases involving defendants with mental disabilities. We do this here. In this article, we reexamine these issues from the perspective of the 198 state cases decided in the Fifth Circuit from 1984 to 2017 involving death penalty verdicts in which, at some stage of the appellate process, a Strickland claim was made (in which there were only 13 cases in which any relief was even preliminarily granted under Strickland). As we demonstrate subsequently, Strickland is indeed a pallid standard, fostering “tolerance of abysmal lawyering,” and is one that makes a mockery of the most vital of constitutional law protections: the right to adequate counsel.

This article will proceed in this way. First, we discuss the background of the development of counsel adequacy in death penalty cases. Next, we look carefully at Strickland, and the subsequent Supreme Court cases that appear—on the surface—to bolster it in this context. We then consider multiple jurisprudential filters that we believe must be taken seriously if this area of the law is to be given any authentic meaning. Next, we will examine and interpret the data that we have developed, looking carefully at what happened after the Strickland-ordered remand in the 13 Strickland “victories.” Finally, we will look at this entire area of law through the filter of therapeutic jurisprudence, and then explain why and how the charade of adequacy of counsel law fails miserably to meet the standards of this important school of thought.

Behind the Scenes, Health Insurers Use Cash and Gifts to Sway Which Benefits Employers Choose

Marshall Allen

Propublica.org

Originally posted February 20, 2019

Here is an excerpt:

These industry payments can’t help but influence which plans brokers highlight for employers, said Eric Campbell, director of research at the University of Colorado Center for Bioethics and Humanities.

“It’s a classic conflict of interest,” Campbell said.

There’s “a large body of virtually irrefutable evidence,” Campbell said, that shows drug company payments to doctors influence the way they prescribe. “Denying this effect is like denying that gravity exists.” And there’s no reason, he said, to think brokers are any different.

Critics say the setup is akin to a single real estate agent representing both the buyer and seller in a home sale. A buyer would not expect the seller’s agent to negotiate the lowest price or highlight all the clauses and fine print that add unnecessary costs.

“If you want to draw a straight conclusion: It has been in the best interest of a broker, from a financial point of view, to keep that premium moving up,” said Jeffrey Hogan, a regional manager in Connecticut for a national insurance brokerage and one of a band of outliers in the industry pushing for changes in the way brokers are paid.

The info is here.

Wednesday, March 27, 2019

Language analysis reveals recent and unusual 'moral polarisation' in Anglophone world

Andrew Masterson

Cosmos Magazine

Originally published March 4, 2019

Here is an excerpt:

Words conveying moral values in more specific domains, however, did not always accord with a similar pattern – revealing, say the researchers, the changing prominence of differing sets of concerns surrounding concepts such as loyalty and betrayal, individualism, and notions of authority.

Remarkably, perhaps, the study is only the second in the academic literature that uses big data to examine shifts in moral values over time. The first, by psychologists Pelin and Selin Kesebir, and published in The Journal of Positive Psychology in 2012, used two approaches to track the frequency of morally-loaded words in a corpus of US books across the twentieth century.

The results revealed a “decline in the use of general moral terms”, and significant downturns in the use of words such as honesty, patience, and compassion.

Haslam and colleagues found that at headline level their results, using a larger dataset, reflected the earlier findings. However, fine-grain investigations revealed a more complex picture. Nevertheless, they say, the changes in the frequency of use for particular types of moral terms are sufficient to allow the twentieth century to be divided into five distinct historical periods.

The words used in the search were taken from lists collated under what is known as Moral Foundations Theory (MFT), a generally supported framework that rejects the idea that morality is monolithic. Instead, the researchers explain, MFT aims to “categorise the automatic and intuitive emotional reactions that commonly occur in moral evaluation across cultures, and [identifies] five psychological systems (or foundations): Harm, Fairness, Ingroup, Authority, and Purity.”
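The excerpt describes the general idea of counting Moral Foundations Theory words over time, but not the study's exact procedure. The sketch below shows one simple dictionary-based way to do it: tokenize each period's texts and report how often each foundation's words occur per N tokens. The five tiny word lists, the toy corpus, and the function name `foundation_rates` are hypothetical stand-ins; the real Moral Foundations Dictionary is much larger and uses wildcard word stems.

```python
import re
from collections import Counter

# Hypothetical mini word lists, one per foundation named in the excerpt.
FOUNDATION_WORDS = {
    "Harm": {"harm", "care", "suffer", "protect"},
    "Fairness": {"fair", "justice", "equal", "cheat"},
    "Ingroup": {"loyal", "betray", "nation", "family"},
    "Authority": {"obey", "duty", "law", "tradition"},
    "Purity": {"pure", "sacred", "disgust", "sin"},
}


def foundation_rates(texts_by_period, per=10_000):
    """Occurrences of each foundation's words per `per` tokens, by period."""
    results = {}
    for period, texts in texts_by_period.items():
        tokens = [t for text in texts for t in re.findall(r"[a-z']+", text.lower())]
        counts = Counter(tokens)
        n = len(tokens) or 1  # avoid dividing by zero for an empty period
        results[period] = {
            foundation: per * sum(counts[w] for w in words) / n
            for foundation, words in FOUNDATION_WORDS.items()
        }
    return results


# Hypothetical toy corpus, standing in for books grouped by period.
corpus = {
    "1900s": ["Duty and law bind the nation.", "We must obey tradition."],
    "1990s": ["Is it fair? Equal justice for all.", "Protect those who suffer."],
}
rates = foundation_rates(corpus, per=100)
for period, by_foundation in rates.items():
    top = max(by_foundation, key=by_foundation.get)
    print(period, top, round(by_foundation[top], 1))
```

Normalizing by token count matters because the number of books published varies greatly across the century; raw counts would mostly track corpus size rather than moral emphasis.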

The info is here.

The Value Of Ethics And Trust In Business.. With Artificial Intelligence

Stephen Ibaraki

Forbes.com

Originally posted March 2, 2019

Here is an excerpt:

Increasingly contributing positively to society and driving positive change are a growing discourse around the world and hitting all sectors and disruptive technologies such as Artificial Intelligence (AI).

With more than $20 Trillion USD wealth transfer from baby boomers to millennials, and their focus on the environment and social impact, this trend will accelerate. Business is aware and taking the lead in this movement of advancing the human condition in a responsible and ethical manner. Values-based leadership, diversity, inclusion, investment and long-term commitment are the multi-stakeholder commitments going forward.

“Over the last 12 years, we have repeatedly seen that those companies who focus on transparency and authenticity are rewarded with the trust of their employees, their customers and their investors. While negative headlines might grab attention, the companies who support the rule of law and operate with decency and fair play around the globe will always succeed in the long term,” explained Ethisphere CEO Timothy Erblich. “Congratulations to all of the 2018 honorees.”

The info is here.

Tuesday, March 26, 2019

Does AI Ethics Have a Bad Name?

Calum Chace

Forbes.com

Originally posted March 7, 2019

Here is an excerpt:

Artificial intelligence is a technology, and a very powerful one, like nuclear fission. It will become increasingly pervasive, like electricity. Some say that its arrival may even turn out to be as significant as the discovery of fire. Like nuclear fission, electricity and fire, AI can have positive impacts and negative impacts, and given how powerful it is and will become, it is vital that we figure out how to promote the positive outcomes and avoid the negative ones.

It's the bias that concerns people in the AI ethics community. They want to minimise the amount of bias in the data which informs the AI systems that help us to make decisions – and ideally, to eliminate the bias altogether. They want to ensure that tech giants and governments respect our privacy at the same time as they develop and deliver compelling products and services. They want the people who deploy AI to make their systems as transparent as possible so that in advance or in retrospect, we can check for sources of bias and other forms of harm.

But if AI is a technology like fire or electricity, why is the field called “AI ethics”? We don’t have “fire ethics” or “electricity ethics,” so why should we have AI ethics? There may be a terminological confusion here, and it could have negative consequences.

One possible downside is that people outside the field may get the impression that some sort of moral agency is being attributed to the AI, rather than to the humans who develop AI systems. The AI we have today is narrow AI: superhuman in certain narrow domains, like playing chess and Go, but useless at anything else. It makes no more sense to attribute moral agency to these systems than it does to a car or a rock. It will probably be many years before we create an AI which can reasonably be described as a moral agent.

The info is here.

Should doctors cry at work?

Fran Robinson

BMJ 2019;364:l690

Many doctors admit to crying at work, whether openly empathising with a patient or on their own behind closed doors. Common reasons for crying are compassion for a dying patient, identifying with a patient’s situation, or feeling overwhelmed by stress and emotion.

Probably still more doctors have done so but been unwilling to admit it for fear that it could be considered unprofessional—a sign of weakness, lack of control, or incompetence. However, it’s increasingly recognised as unhealthy for doctors to bottle up their emotions.

Unexpected tragic events

Psychiatry is a specialty in which doctors might view crying as acceptable, says Annabel Price, visiting researcher at the Department of Psychiatry, University of Cambridge, and a consultant in liaison psychiatry for older adults.

Having discussed the issue with colleagues before being interviewed for this article, she says that none of them would think less of a colleague for crying at work: “There are very few doctors who haven’t felt like crying at work now and again.”

A situation that may move psychiatrists to tears is finding that a patient they’ve been closely involved with has died by suicide. “This is often an unexpected tragic event: it’s very human to become upset, and sometimes it’s hard not to cry when you hear difficult news,” says Price.

The info is here.

Monday, March 25, 2019

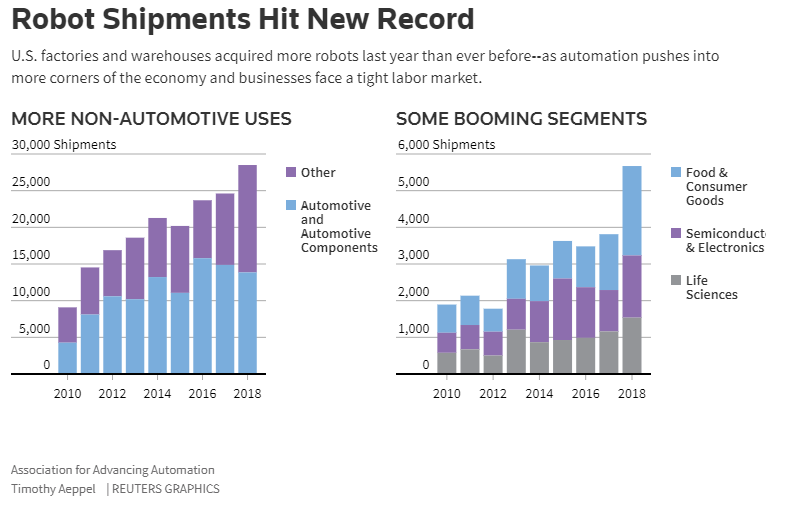

U.S. companies put record number of robots to work in 2018

Reuters

Originally published February 28, 2019

U.S. companies installed more robots last year than ever before, as cheaper and more flexible machines put them within reach of businesses of all sizes and in more corners of the economy beyond their traditional foothold in car plants.

Shipments hit 28,478, nearly 16 percent more than in 2017, according to data seen by Reuters that was set for release on Thursday by the Association for Advancing Automation, an industry group based in Ann Arbor, Michigan.

Shipments increased in every sector the group tracks, except automotive, where carmakers cut back after finishing a major round of tooling up for new truck models.

The info is here.

Artificial Intelligence and Black‐Box Medical Decisions: Accuracy versus Explainability

Alex John London

The Hastings Center Report

Volume 49, Issue 1, January/February 2019, Pages 15-21

Abstract

Although decision‐making algorithms are not new to medicine, the availability of vast stores of medical data, gains in computing power, and breakthroughs in machine learning are accelerating the pace of their development, expanding the range of questions they can address, and increasing their predictive power. In many cases, however, the most powerful machine learning techniques purchase diagnostic or predictive accuracy at the expense of our ability to access “the knowledge within the machine.” Without an explanation in terms of reasons or a rationale for particular decisions in individual cases, some commentators regard ceding medical decision‐making to black box systems as contravening the profound moral responsibilities of clinicians. I argue, however, that opaque decisions are more common in medicine than critics realize. Moreover, as Aristotle noted over two millennia ago, when our knowledge of causal systems is incomplete and precarious—as it often is in medicine—the ability to explain how results are produced can be less important than the ability to produce such results and empirically verify their accuracy.

The info is here.

Sunday, March 24, 2019

An Ethical Obligation for Bioethicists to Utilize Social Media

Herron, PD

Hastings Cent Rep. 2019 Jan;49(1):39-40.

doi: 10.1002/hast.978.

Here is an excerpt:

Unfortunately, it appears that bioethicists are no better informed than other health professionals, policy experts, or (even) elected officials, and they are sometimes resistant to becoming informed. But bioethicists have a duty to develop our knowledge and usefulness with respect to social media; many of our skills can and should be adapted to this area. There is growing evidence of the power of social media to foster dissemination of misinformation. The harms associated with misinformation or “fake news” are not new threats. Historically, there have always been individuals or organized efforts to propagate false information or to deceive others. Social media and other technologies have provided the ability to rapidly and expansively share both information and misinformation. Bioethics serves society by offering guidance about ethical issues associated with advances in medicine, science, and technology. Much of the public’s conversation about and exposure to these emerging issues occurs online. If we bioethicists are not part of the mix, we risk yielding to alternative and less authoritative sources of information. Social media’s transformative impact has led some to view it as not just a personal tool but the equivalent to a public utility, which, as such, should be publicly regulated. Bioethicists can also play a significant part in this dialogue. But to do so, we need to engage with social media. We need to ensure that our understanding of social media is based on experiential use, not just abstract theory.

Bioethics has expanded over the past few decades, extending beyond the academy to include, for example, clinical ethics consultants and leadership positions in public affairs and public health policy. These varied roles bring weighty responsibilities and impose a need for critical reflection on how bioethicists can best serve the public interest in a way that reflects and is accountable to the public’s needs.

Saturday, March 23, 2019

The Fake Sex Doctor Who Conned the Media Into Publicizing His Bizarre Research on Suicide, Butt-Fisting, and Bestiality

Jennings Brown

www.gizmodo.com

Originally published March 1, 2019

Here is an excerpt:

Despite Sendler’s claims that he is a doctor, and despite the stethoscope in his headshot, he is not a licensed doctor of medicine in the U.S. Two employees of the Harvard Medical School registrar confirmed to me that Sendler was never enrolled and never received an MD from the medical school. A Harvard spokesperson told me Sendler never received a PhD or any degree from Harvard University.

“I got into Harvard Medical School for MD, PhD, and Masters degree combined,” Sendler told me. I asked if he was able to get a PhD in sexual behavior from Harvard Medical School (Harvard Medical School does not provide any sexual health focuses) and he said “Yes. Yes,” without hesitation, then doubled-down: “I assume that there’s still some kind of sense of wonder on campus [about me]. Because I can see it when I go and visit [Harvard], that people are like, ‘Wow you had the balls, because no one else did that,’” presumably referring to his academic path.

Sendler told me one of his mentors when he was at Harvard Medical School was Yi Zhang, a professor of genetics at the school. Sendler said Zhang didn’t believe in him when he was studying at Harvard. But, Sendler said, he met with Zhang in Boston just a month prior to our interview. And Zhang was now impressed by Sendler’s accomplishments.

Sendler said Zhang told him in January, “Congrats. You did what you felt was right... Turns out, wow, you have way more power in research now than I do. And I’m just very proud of you, because I have people that I really put a lot of effort, after you left, into making them the best and they didn’t turn out that well.”

The info is here.

This is a fairly bizarre story and worth the long read.

Friday, March 22, 2019

We need to talk about systematic fraud

Jennifer Byrne

Nature 566, 9 (2019)

doi: 10.1038/d41586-019-00439-9

Here is an excerpt:

Some might argue that my efforts are inconsequential, and that the publication of potentially fraudulent papers in low-impact journals doesn’t matter. In my view, we can’t afford to accept this argument. Such papers claim to uncover mechanisms behind a swathe of cancers and rare diseases. They could derail efforts to identify easily measurable biomarkers for use in predicting disease outcomes or whether a drug will work. Anyone trying to build on any aspect of this sort of work would be wasting time, specimens and grant money. Yet, when I have raised the issue, I have had comments such as “ah yes, you’re working on that fraud business”, almost as a way of closing down discussion. Occasionally, people’s reactions suggest that ferreting out problems in the literature is a frivolous activity, done for personal amusement, or that it is vindictive, pursued to bring down papers and their authors.

Why is there such enthusiasm for talking about faulty research practices, yet such reluctance to discuss deliberate deception? An analysis of the Diederik Stapel fraud case that rocked the psychology community in 2011 has given me some ideas (W. Stroebe et al. Perspect. Psychol. Sci. 7, 670–688; 2012). Fraud departs from community norms, so scientists do not want to think about it, let alone talk about it. It is even more uncomfortable to think about organized fraud that is so frequently associated with one country. This becomes a vicious cycle: because fraud is not discussed, people don’t learn about it, so they don’t consider it, or they think it’s so rare that it’s unlikely to affect them, and so papers are less likely to come under scrutiny. Thinking and talking about systematic fraud is essential to solving this problem. Raising awareness and the risk of detection may well prompt new ways to identify papers produced by systematic fraud.

Last year, China announced sweeping plans to curb research misconduct. That’s a great first step. Next should be a review of publication quotas and cash rewards, and the closure of ‘paper factories’.

The info is here.

Pop Culture, AI And Ethics

Phaedra Boinodiris

Forbes.com

Originally published February 24, 2019

Here is an excerpt:

5 Areas of Ethical Focus

The guide goes on to outline five areas of ethical focus or consideration:

Accountability – there is a group responsible for ensuring that REAL guests in the hotel are interviewed to determine their needs. When feedback is negative this group implements a feedback loop to better understand preferences. They ensure that at any point in time, a guest can turn the AI off.

Fairness – If there is bias in the system, the accountable team must take the time to train with a larger, more diverse set of data. Ensure that the data collected about a user's race, gender, etc., in combination with their usage of the AI, will not be used to market to or exclude certain demographics.

Explainability and Enforced Transparency – if a guest doesn’t like the AI’s answer, she can ask how it made that recommendation and which dataset it used. A user must explicitly opt in to use the assistant, and the assistant must give the guest options to consent to what information is gathered.

User Data Rights – The hotel does not own a guest’s data, and a guest has the right to have that data purged from the system at any time. Upon request, a guest can receive a summary of what information was gathered by the AI assistant.

Value Alignment – Align the experience to the values of the hotel. The hotel values privacy and ensuring that guests feel respected and valued. Make it clear that the AI assistant is not designed to keep data or monitor guests. Relay how often guest data is auto deleted. Ensure that the AI can speak in the guest’s respective language.

The info is here.

Thursday, March 21, 2019

Anger as a moral emotion: A 'bird's eye view' systematic review

Tim Lomas

Counseling Psychology Quarterly

Anger is a common problem for which counseling/psychotherapy clients seek help, and is typically regarded as an invidious negative emotion to be ameliorated. However, it may be possible to reframe anger as a moral emotion, arising in response to perceived transgressions, thereby endowing it with meaning. In that respect, the current paper offers a ‘bird’s eye’ systematic review of empirical research on anger as a moral emotion (i.e., one focusing broadly on the terrain as a whole, rather than on specific areas). Three databases were reviewed from the start of their records to January 2019. Eligibility criteria included empirical research, published in English in peer-reviewed journals, on anger specifically as a moral emotion. 175 papers met the criteria, and fell into four broad classes of study: survey-based; experimental; physiological; and qualitative. In reviewing the articles, this paper pays particular attention to: how/whether anger can be differentiated from other moral emotions; antecedent causes and triggers; contextual factors that influence or mitigate anger; and outcomes arising from moral anger. Together, the paper offers a comprehensive overview of current knowledge into this prominent and problematic emotion. The results may be of use to counsellors and psychotherapists helping to address anger issues in their clients.

Download the paper here.

Note: Other "symptoms" in mental health can also be reframed as moral issues. PTSD is similar to moral injury. OCD is highly correlated with scrupulosity, an excessive concern with moral purity. Unhealthy guilt is found in many depressed individuals. And psychologists use forgiveness of self and others as a goal in treatment.

China’s CRISPR twins might have had their brains inadvertently enhanced

Antonio Regalado

MIT Technology Review

Originally posted February 21, 2019

The brains of two genetically edited girls born in China last year may have been changed in ways that enhance cognition and memory, scientists say.

The twins, called Lulu and Nana, reportedly had their genes modified before birth by a Chinese scientific team using the new editing tool CRISPR. The goal was to make the girls immune to infection by HIV, the virus that causes AIDS.

Now, new research shows that the same alteration introduced into the girls’ DNA, deletion of a gene called CCR5, not only makes mice smarter but also improves human brain recovery after stroke, and could be linked to greater success in school.

“The answer is likely yes, it did affect their brains,” says Alcino J. Silva, a neurobiologist at the University of California, Los Angeles, whose lab uncovered a major new role for the CCR5 gene in memory and the brain’s ability to form new connections.

“The simplest interpretation is that those mutations will probably have an impact on cognitive function in the twins,” says Silva. He says the exact effect on the girls’ cognition is impossible to predict, and “that is why it should not be done.”

The info is here.

Wednesday, March 20, 2019

Israel Approves Compassionate Use of MDMA to Treat PTSD

Ido Efrati

www.haaretz.com

Originally posted February 10, 2019

MDMA, popularly known as ecstasy, is a drug more commonly associated with raves and nightclubs than a therapist’s office.

Emerging research has shown promising results in using this “party drug” to treat patients suffering from post-traumatic stress disorder, and Israel’s Health Ministry has just approved the use of MDMA to treat dozens of patients.

MDMA is classified in Israel as a “dangerous drug”, recreational use is illegal, and therapeutic use of MDMA has yet to be formally approved and is still in clinical trials.

However, this treatment is deemed as “compassionate use,” which allows drugs that are still in development to be made available to patients outside of a clinical trial due to the lack of effective alternatives.

The info is here.

Should This Exist? The Ethics Of New Technology

Lulu Garcia-Navarro

www.NPR.org

Originally posted March 3, 2019

Not every new technology product hits the shelves.

Tech companies kill products and ideas all the time — sometimes it's because they don't work, sometimes there's no market.

Or maybe, it might be too dangerous.

Recently, the research firm OpenAI announced that it would not be releasing a version of a text generator they developed, because of fears that it could be misused to create fake news. The text generator was designed to improve dialogue and speech recognition in artificial intelligence technologies.

The organization's GPT-2 text generator can generate paragraphs of coherent, continuing text based off of a prompt from a human. For example, when inputted with the claim, "John F. Kennedy was just elected President of the United States after rising from the grave decades after his assassination," the generator spit out the transcript of "his acceptance speech" that read in part:

It is time once again. I believe this nation can do great things if the people make their voices heard. The men and women of America must once more summon our best elements, all our ingenuity, and find a way to turn such overwhelming tragedy into the opportunity for a greater good and the fulfillment of all our dreams.

Considering the serious issues around fake news and online propaganda that came to light during the 2016 elections, it's easy to see how this tool could be used for harm.

The info is here.

Tuesday, March 19, 2019

Treasury Secretary Steven Mnuchin's Hollywood ties spark ethics questions in China trade talks

Emma Newburger

CNBC.com

Originally posted March 15, 2019

Treasury Secretary Steven Mnuchin, one of President Donald Trump's key negotiators in the U.S.-China trade talks, has pushed Beijing to grant the American film industry greater access to its markets.

But now, Mnuchin’s ties to Hollywood are raising ethical questions about his role in those negotiations. Mnuchin had been a producer in a raft of successful films prior to joining the Trump administration.

In 2017, he divested his stake in a film production company after joining the White House. But he sold that position to his wife, filmmaker and actress Louise Linton, for between $1 million and $2 million, The New York Times reported on Thursday. At the time, she was his fiancée.

That company, StormChaser Partners, helped produce the mega-hit movie “Wonder Woman,” which grossed $90 million in China, according to the Times. Yet, because of China’s restrictions on foreign films, the producers received a small portion of that money. Mnuchin has been personally engaged in trying to ease those rules, which could be a boon to the industry, according to the Times.

The info is here.

We're Teaching Consent All Wrong

Sarah Sparks

www.edweek.org

Originally published January 8, 2019

Here is an excerpt:

Instead, researchers and educators offer an alternative: Teach consent as a life skill—not just a sex skill—beginning in early childhood, and begin discussing consent and communication in the context of relationships by 5th or 6th grades, before kids start seriously thinking about sex. (Think that's too young? In yet another study, the CDC found 8 in 10 teenagers didn't get sex education until after they'd already had sex.)

Educators and parents often balk at discussing strategies for and examples of consent because "they incorrectly believe that if you teach consent, students will become more sexually active," said Mike Domitrz, founder of the Date Safe Project, a Milwaukee-based sexual-assault prevention program that focuses on consent education and bystander interventions. "It's a myth. Students of both genders are pretty consistent that a lot of the sexual activity that is going on is occurring under pressure."

Studies suggest young women are more likely to judge consent on verbal communication and young men rely more on nonverbal cues, though both groups said nonverbal signals are often misinterpreted. And teenagers can be particularly bad at making decisions about risky behavior, including sexual situations, while under social pressure. Brain studies have found adolescents are more likely to take risks and less likely to think about negative consequences when they are in emotionally arousing, or "hot," situations, and that bad decision-making tends to get even worse when they feel they are being judged by their friends.

Making understanding and negotiating consent a life skill gives children and adolescents ways to understand and respect both their own desires and those of other people. And it can help educators frame instruction about consent without sinking into the morass of long-running arguments and anxiety over gender roles, cultural values, and teen sexuality.

The info is here.

Monday, March 18, 2019

The college admissions scandal is a morality play

Elaine Ayala

San Antonio Express-News

Originally posted March 16, 2019

The college admission cheating scandal that raced through social media and dominated news cycles this week wasn’t exactly shocking: Wealthy parents rigged the system for their underachieving children.

It’s an ancient morality play set at elite universities with an unseemly cast of characters: spoiled teens and shameless parents; corrupt test proctors and paid test takers; as well as college sports officials willing to be bribed and a ringleader who ultimately turned on all of them.

William “Rick” Singer, who went to college in San Antonio, wore a wire to cooperate with FBI investigators.

(cut)

Yet even though they were arrested, the 50 people involved managed to secure the best possible outcome under the circumstances. Unlike many caught shoplifting or possessing small amounts of marijuana and who lack the lawyers and resources to help them navigate the legal system, the accused parents and coaches quickly posted bond and were promptly released without spending much time in custody.

The info is here.

OpenAI's Realistic Text-Generating AI Triggers Ethics Concerns

William Falcon

Forbes.com

Originally posted February 18, 2019

Here is an excerpt:

Why you should care.

GPT-2 is the closest we have come to making conversational AI a possibility. Although conversational AI is far from solved, chatbots powered by this technology could help doctors scale advice over chats, scale advice for potential suicide victims, improve translation systems, and improve speech recognition across applications.

Although OpenAI acknowledges these potential benefits, it also acknowledges the potential risks of releasing the technology. Misuse could include impersonating others online, generating misleading news headlines, or automating the creation of fake posts on social media.

But I argue these malicious applications are already possible without this AI. There exist other public models which can already be used for these purposes. Thus, I think not releasing this code is more harmful to the community because A) it sets a bad precedent for open research, B) keeps companies from improving their services, C) unnecessarily hypes these results and D) may trigger unnecessary fears about AI in the general public.

The info is here.

Sunday, March 17, 2019

Actions Speak Louder Than Outcomes in Judgments of Prosocial Behavior

Daniel A. Yudkin, Annayah M. B. Prosser, and Molly J. Crockett

Emotion (2018).

Recently proposed models of moral cognition suggest that people's judgments of harmful acts are influenced by their consideration both of those acts' consequences ("outcome value"), and of the feeling associated with their enactment ("action value"). Here we apply this framework to judgments of prosocial behavior, suggesting that people's judgments of the praiseworthiness of good deeds are determined both by the benefit those deeds confer to others and by how good they feel to perform. Three experiments confirm this prediction. After developing a new measure to assess the extent to which praiseworthiness is influenced by action and outcome values, we show how these factors make significant and independent contributions to praiseworthiness. We also find that people are consistently more sensitive to action than to outcome value in judging the praiseworthiness of good deeds, but not harmful deeds. This observation echoes the finding that people are often insensitive to outcomes in their giving behavior. Overall, this research tests and validates a novel framework for understanding moral judgment, with implications for the motivations that underlie human altruism.

Here is an excerpt:

On a broader level, past work has suggested that judging the wrongness of harmful actions involves a process of “evaluative simulation,” whereby we evaluate the moral status of another’s action by simulating the affective response that we would experience performing the action ourselves (Miller et al., 2014). Our results are consistent with the possibility that evaluative simulation also plays a role in judging the praiseworthiness of helpful actions. If people evaluate helpful actions by simulating what it feels like to perform the action, then we would expect to see similar biases in moral evaluation as those that exist for moral action. Previous work has shown that individuals often do not act to maximize the benefits that others receive, but instead to maximize the good feelings associated with performing good deeds (Berman et al., 2018; Gesiarz & Crockett, 2015; Ribar & Wilhelm, 2002). Thus, the asymmetry in moral evaluation seen in the present studies may reflect a correspondence between first-person moral decision-making and third-person moral evaluation.

Download the pdf here.

Saturday, March 16, 2019

How Should AI Be Developed, Validated, and Implemented in Patient Care?

Michael Anderson and Susan Leigh Anderson

AMA J Ethics. 2019;21(2):E125-130.

doi: 10.1001/amajethics.2019.125.

Abstract