Muralidharan, A., Savulescu, J. & Schaefer, G.O.

Ethics Inf Technol 26, 16 (2024).

Abstract

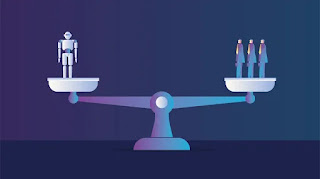

This paper argues that one problem that besets black-box AI is that it lacks algorithmic justifiability. We argue that the norm of shared decision making in medical care presupposes that treatment decisions ought to be justifiable to the patient. Medical decisions are justifiable to the patient only if they are compatible with the patient’s values and preferences and the patient is able to see that this is so. Patient-directed justifiability is threatened by black-box AIs because the lack of rationale provided for the decision makes it difficult for patients to ascertain whether there is adequate fit between the decision and the patient’s values. This paper argues that achieving algorithmic transparency does not help patients bridge the gap between their medical decisions and values. We introduce a hypothetical model we call Justifiable AI to illustrate this argument. Justifiable AI aims at modelling normative and evaluative considerations in an explicit way so as to provide a stepping stone for patient and physician to jointly decide on a course of treatment. If our argument succeeds, we should prefer these justifiable models over alternatives if the former are available and aim to develop said models if not.

Here is my summary:

The article argues that "black-box" AI poses a problem in medicine because it offers no rationale for its recommendations. Without that rationale, patients cannot readily tell whether a recommended treatment fits their own values and preferences, which is what shared decision making requires. The authors also argue that algorithmic transparency on its own does not close this gap.

The article proposes a hypothetical alternative, called "Justifiable AI", to address this problem. Justifiable AI would model normative and evaluative considerations explicitly, giving patient and physician a starting point for deciding jointly on a course of treatment. This would allow patients to see how a recommendation aligns with their own values and to make informed decisions about their care. The authors conclude that such models should be preferred where they are available and developed where they are not.