Eric Lipton

The New York Times

Originally posted 21 Nov 23

Here is an excerpt:

Rapid advances in artificial intelligence and the intense use of drones in conflicts in Ukraine and the Middle East have combined to make the issue that much more urgent. So far, drones generally rely on human operators to carry out lethal missions, but software is being developed that soon will allow them to find and select targets more on their own.

The intense jamming of radio communications and GPS in Ukraine has only accelerated the shift, as autonomous drones can often keep operating even when communications are cut off.

“This isn’t the plot of a dystopian novel, but a looming reality,” Gaston Browne, the prime minister of Antigua and Barbuda, told officials at a recent U.N. meeting.

Pentagon officials have made it clear that they are preparing to deploy autonomous weapons in a big way.

Deputy Defense Secretary Kathleen Hicks announced this summer that the U.S. military would “field attritable, autonomous systems at scale of multiple thousands” in the coming two years, saying that the push to compete with China’s own investment in advanced weapons necessitated that the United States “leverage platforms that are small, smart, cheap and many.”

The concept of an autonomous weapon is not entirely new. Land mines — which detonate automatically — have been used since the Civil War. The United States has missile systems that rely on radar sensors to autonomously lock on to and hit targets.

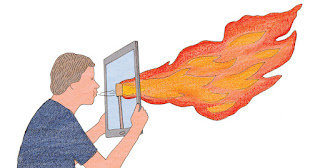

What is changing is the introduction of artificial intelligence that could give weapons systems the capability to make decisions themselves after taking in and processing information.

Here is a summary:

This article discusses the debate at the UN over lethal autonomous weapons (LAWs), essentially AI-equipped drones that can choose and attack targets without human intervention. There are concerns that this technology could lead to unintended casualties, make wars more likely, and remove the human element from the decision to take a life.

- Many countries are worried about the development and deployment of LAWs.

- Austria and other countries are proposing a total ban on LAWs or, at a minimum, strict regulations that require human control and limit how the weapons can be used.

- The US, Russia, and China oppose a ban and argue that LAWs could reduce civilian casualties in wars.

- The US prefers non-binding guidelines over new international laws.

- The UN is currently deadlocked on the issue, with no clear path toward creating regulations.