Charles Piller

Science

Originally published 26 Sept 24

In 2016, when the U.S. Congress unleashed a flood of new funding for Alzheimer’s disease research, the National Institute on Aging (NIA) tapped veteran brain researcher Eliezer Masliah as a key leader for the effort. He took the helm at the agency’s Division of Neuroscience, whose budget—$2.6 billion in the last fiscal year—dwarfs the rest of NIA combined.

As a leading federal ambassador to the research community and a chief adviser to NIA Director Richard Hodes, Masliah would gain tremendous influence over the study and treatment of neurological conditions in the United States and beyond. He saw the appointment as his career capstone. Masliah told the online discussion site Alzforum that “the golden era of Alzheimer’s research” was coming and he was eager to help NIA direct its bounty. “I am fully committed to this effort. It is a historical moment.”

Masliah appeared an ideal selection. The physician and neuropathologist conducted research at the University of California San Diego (UCSD) for decades, and his drive, curiosity, and productivity propelled him into the top ranks of scholars on Alzheimer’s and Parkinson’s disease. His roughly 800 research papers, many on how those conditions damage synapses, the junctions between neurons, have made him one of the most cited scientists in his field. His work on topics including alpha-synuclein—a protein linked to both diseases—continues to influence basic and clinical science.

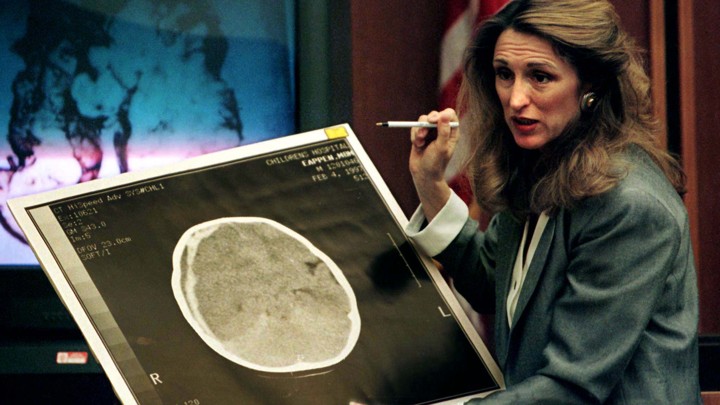

But over the past 2 years questions have arisen about some of Masliah’s research. A Science investigation has now found that scores of his lab studies at UCSD and NIA are riddled with apparently falsified Western blots—images used to show the presence of proteins—and micrographs of brain tissue. Numerous images seem to have been inappropriately reused within and across papers, sometimes published years apart in different journals, describing divergent experimental conditions.

Here are some thoughts:

In 2016, Eliezer Masliah was appointed director of the Division of Neuroscience at the National Institute on Aging (NIA). The appointment came as Congress significantly increased funding for Alzheimer's disease research. Masliah, a veteran brain researcher, was seen as an ideal choice because of his extensive experience and prolific output of approximately 800 published papers.

A recent investigation by Science has uncovered potential research misconduct in Masliah's work. The investigation found issues with images in 132 of his research papers published between 1997 and 2023. These issues include apparently falsified Western blots and micrographs of brain tissue, as well as inappropriate reuse of images within and across papers.

The National Institutes of Health (NIH) conducted its own investigation and found evidence of research misconduct by Masliah. The NIH investigation specifically identified issues with images in two studies co-authored by Masliah. As a result, Masliah is no longer serving as the director of NIA's neuroscience division.

Masliah's research has been influential in the development and testing of experimental drugs for neurodegenerative diseases. For example, his work contributed to the approval of clinical trials for prasinezumab, an antibody targeting alpha-synuclein in Parkinson's disease. However, the drug showed no benefit compared to placebo in a trial of 316 Parkinson's patients.

The allegations of misconduct have shocked the neuroscience community. Eleven neuroscientists who reviewed the dossier of suspect work for Science expressed disbelief at the scale of the apparent problems. Many called for thorough investigations by NIH, scholarly journals, funders, and the University of California San Diego, where Masliah conducted much of his research.

The questions raised about Masliah's research could have far-reaching implications for Alzheimer's and Parkinson's disease research. Given his influential position and how widely his work has been cited, the integrity of a significant body of research in these areas may now be in doubt.