Donovan Slack

USA Today

Originally published December 3, 2017

Here is an excerpt:

A VA hospital in Oklahoma knowingly hired a psychiatrist previously sanctioned for sexual misconduct who went on to sleep with a VA patient, according to internal documents. A Louisiana VA clinic hired a psychologist with felony convictions. The VA ended up firing him after they determined he was a “direct threat to others” and the VA’s mission.

As a result of USA TODAY’s investigation of Schneider, VA officials determined his hiring — and potentially that of an unknown number of other doctors — was illegal.

Federal law bars the agency from hiring physicians whose license has been revoked by a state board, even if they still hold an active license in another state. Schneider still has a license in Montana, even though his Wyoming license was revoked.

VA spokesman Curt Cashour said agency officials provided hospital officials in Iowa City with “incorrect guidance” green-lighting Schneider’s hire. The VA moved to fire Schneider last Wednesday. He resigned instead.

The article is here.

Welcome to the Nexus of Ethics, Psychology, Morality, Philosophy and Health Care

Sunday, December 31, 2017

Saturday, December 30, 2017

Are There Non-human Persons? Are There Non-person Humans?

Glenn Cohen | TEDxCambridge

Published October 24, 2017

If we want to live a moral life, how should we treat animals or complex artificial intelligence? What kinds of rights should non-humans have? Harvard Law Professor and world-renowned bioethics expert Glenn Cohen shares how our current moral vocabulary may be leading us into fundamental errors and how to face the complex moral world around us. Glenn Cohen is one of the world’s leading experts on the intersection of bioethics and the law, as well as health law. He is an award-winning speaker and writer having authored more than 98 articles and chapters appearing in countless journals and gaining coverage on ABC, CNN, MSNBC, PBS, the New York Times and more. He recently finished his role as one of the project leads on the multi-million dollar Football Players Health Study at Harvard aimed at improving NFL player health.

Friday, December 29, 2017

Freud in the scanner

M. M. Owen

aeon.co

Originally published December 7, 2017

Here is an excerpt:

This is why Freud is less important to the field than what Freud represents. Researching this piece, I kept wondering: why hang on to Freud? He is an intensely polarising figure, so polarising that through the 1980s and ’90s there raged the so-called Freud Wars, fighting on one side of which were a whole team of authors driven (as the historian of science John Forrester put it in 1997) by the ‘heartfelt wish that Freud might never have been born or, failing to achieve that end, that all his works and influence be made as nothing’. Indeed, a basic inability to track down anyone with a dispassionate take on psychoanalysis was a frustration of researching this essay. The certitude that whatever I write here will enrage some readers hovers at the back of my mind as I think ahead to skimming the comments section. Preserve subjectivity, I thought, fine, I’m onboard. But why not eschew the heavily contested Freudianism for the psychotherapy of Irvin D Yalom, which takes an existentialist view of the basic challenges of life? Why not embrace Viktor Frankl’s logotherapy, which prioritises our fundamental desire to give life meaning, or the philosophical tradition of phenomenology, whose first principle is that subjectivity precedes all else?

Within neuropsychoanalysis, though, Freud symbolises the fact that, to quote the neuroscientist Ramachandran’s Phantoms in the Brain (1998), you can ‘look for laws of mental life in much the same way that a cardiologist might study the heart or an astronomer study planetary motion’. And on the clinical side, it is simply a fact that before Freud there was really no such thing as therapy, as we understand that word today. In Yalom’s novel When Nietzsche Wept (1992), Josef Breuer, Freud’s mentor, is at a loss for how to counsel the titular German philosopher out of his despair: ‘There is no medicine for despair, no doctor for the soul,’ he says. All Breuer can recommend are therapeutic spas, ‘or perhaps a talk with a priest’.

The article is here.

Leadership and Counseling Psychology: Dilemmas, Ambiguities, and Possibilities

Sandra Shullman

The Counseling Psychologist

First published November 28, 2017

Abstract

In this article, I introduce the scientist–practitioner–advocate–leader model as a strategy for addressing the rapidly changing context for psychologists and psychology. The concept of counseling psychologists as learning leaders is derived from the foundations and values of the profession. Incorporating leadership as a core identity for counseling psychologists may create new directions for science and practice as we increasingly integrate multicultural identities, training, and diverse personal backgrounds into social justice initiatives. The article presents six dilemmas faced by counseling psychologists in assuming leadership as part of professional identity, as well as eight learning leader behaviors that counseling psychologists could integrate in their management of ambiguity and uncertainty across various levels of human organization. The article concludes with a discussion of future possibilities that may arise by adopting leadership as part of the role and core identity of counseling psychology.

The article is here.

Thursday, December 28, 2017

‘Politicians want us to be fearful. They’re manipulating us for their own interest'

Decca Aitkenhead

The Guardian

Originally published December 8, 2017

Here are two excerpts:

“Yes, I hate to say it, but yes. Democracy is an advance past the tribal nature of our being, the tribal nature of society, which was there for hundreds of thousands, if not millions, of years. It’s very easy for us to fall back into our tribal, evolutionary nature – tribe against tribe, us against them. It’s a very powerful motivator.” Because it speaks to our most primitive self? “Yes, and we don’t realise how powerful it is.” Until we have understood its power, Bargh argues, we have no hope of overcoming it. “So that’s what we have to do.” As he writes: “Refusing to believe the evidence, just to maintain one’s belief in free will, actually reduces the amount of free will that person has.”

(cut)

Participants were asked to fill out an anonymous questionnaire devised to reveal their willingness to use power over a woman to extract sexual favours if guaranteed to get away with it. Some were asked to rate a female participant’s attractiveness. Others were first primed by a word-association technique, using words such as “boss”, “authority”, “status” and “power”, and then asked to rate her. Bargh found the power-priming made no difference whatsoever to men who had scored low on sexual harassment and aggression tendencies. Among men who had scored highly, however, it was a very different case. Without the notion of power being activated in their brains, they found her unattractive. She only became attractive to them once the idea of power was active in their minds.

This, Bargh suggests, might explain how sexual harassers can genuinely tell themselves: “‘I’m behaving like anybody does when they’re attracted to somebody else. I’m flirting. I’m asking her out. I want to date her. I’m doing everything that you do if you’re attracted to somebody.’ What they don’t realise is the reason they’re attracted to her is because of their power over her. That’s what they don’t get.”

The article is here.

Why are America's farmers killing themselves in record numbers?

Debbie Weingarten

The Guardian

Originally published December 6, 2017

Here is an excerpt:

“Farming has always been a stressful occupation because many of the factors that affect agricultural production are largely beyond the control of the producers,” wrote Rosmann in the journal Behavioral Healthcare. “The emotional wellbeing of family farmers and ranchers is intimately intertwined with these changes.”

Last year, a study by the Centers for Disease Control and Prevention (CDC) found that people working in agriculture – including farmers, farm laborers, ranchers, fishers, and lumber harvesters – take their lives at a rate higher than any other occupation. The data suggested that the suicide rate for agricultural workers in 17 states was nearly five times higher compared with that in the general population.

After the study was released, Newsweek reported that the suicide death rate for farmers was more than double that of military veterans. This, however, could be an underestimate, as the data collected skipped several major agricultural states, including Iowa. Rosmann and other experts add that the farmer suicide rate might be higher, because an unknown number of farmers disguise their suicides as farm accidents.

The US farmer suicide crisis echoes a much larger farmer suicide crisis happening globally: an Australian farmer dies by suicide every four days; in the UK, one farmer a week takes his or her own life; in France, one farmer dies by suicide every two days; in India, more than 270,000 farmers have died by suicide since 1995.

The article is here.

Wednesday, December 27, 2017

The Phenomenon of ‘Bud Sex’ Between Straight Rural Men

Jesse Singal

thecut.com

Originally posted December 18, 2016

A lot of men have sex with other men but don’t identify as gay or bisexual. A subset of these men who have sex with men, or MSM, live lives that are, in all respects other than their occasional homosexual encounters, quite straight and traditionally masculine — they have wives and families, they embrace various masculine norms, and so on. They are able to, in effect, compartmentalize an aspect of their sex lives in a way that prevents it from blurring into or complicating their more public identities. Sociologists are quite interested in this phenomenon because it can tell us a lot about how humans interpret thorny questions of identity and sexual desire and cultural expectations.

(cut)

Specifically, Silva was trying to understand better the interplay between “normative rural masculinity” — the set of mores and norms that defines what it means to be a rural man — and these men’s sexual encounters. In doing so, he introduces a really interesting and catchy concept, “bud-sex”...

The article is here.

The Desirability of Storytellers

Ed Young

The Atlantic

Originally posted December 5, 2017

Here are several excerpts:

Storytelling is a universal human trait. It emerges spontaneously in childhood, and exists in all cultures thus far studied. It’s also ancient: Some specific stories have roots that stretch back for around 6,000 years. As I’ve written before, these tales aren’t quite as old as time, but perhaps as old as wheels and writing. Because of its antiquity and ubiquity, some scholars have portrayed storytelling as an important human adaptation—and that’s certainly how Migliano sees it. Among the Agta, her team found evidence that stories—and the very act of storytelling—arose partly as a way of cementing social bonds, and instilling an ethic of cooperation.

(cut)

In fact, the Agta seemed to value storytelling above all else. Good storytellers were twice as likely to be named as ideal living companions as more pedestrian tale spinners, and storytelling acumen mattered far more than all the other skills. “It was highly valued, twice as much as being a good hunter,” says Migliano. “We were puzzled.”

(cut)

Skilled Agta storytellers are more likely to receive gifts, and they’re not only more desirable as living companions—but also as mates. On average, they have 0.5 more children than their peers. That’s a crucial result. Stories might help to knit communities together, but evolution doesn’t operate for the good of the group. If storytelling is truly an adaptation, as Migliano suggests, it has to benefit individuals who are good at it—and it clearly does.

“It’s often said that telling stories, and other cultural practices such as singing and dancing, help group cooperation, but real-world tests of this idea are not common,” says Michael Chwe, a political scientist at the University of California, Los Angeles, who studies human cooperation. “The team’s attempt to do this is admirable.”

The article is here.

Tuesday, December 26, 2017

When Morals Ain’t Enough: Robots, Ethics, and the Rules of the Law

Pagallo, U.

Minds & Machines (2017) 27: 625.

https://doi.org/10.1007/s11023-017-9418-5

Abstract

No single moral theory can instruct us as to whether and to what extent we are confronted with legal loopholes, e.g. whether or not new legal rules should be added to the system in the criminal law field. This question on the primary rules of the law appears crucial for today’s debate on roboethics and still, goes beyond the expertise of robo-ethicists. On the other hand, attention should be drawn to the secondary rules of the law: The unpredictability of robotic behaviour and the lack of data on the probability of events, their consequences and costs, make it hard to determine the levels of risk and hence, the amount of insurance premiums and other mechanisms on which new forms of accountability for the behaviour of robots may hinge. By following Japanese thinking, the aim is to show why legally de-regulated, or special, zones for robotics, i.e. the secondary rules of the system, pave the way to understand what kind of primary rules we may want for our robots.

The article is here.

Should Robots Have Rights? Four Perspectives

John Danaher

Philosophical Disquisitions

Originally published October 31, 2017

Here is an excerpt:

The Four Positions on Robot Rights

Before I get into the four perspectives that Gunkel reviews, I’m going to start by asking a question that he does not raise (in this paper), namely: what would it mean to say that a robot has a ‘right’ to something? This is an inquiry into the nature of rights in the first place. I think it is important to start with this question because it is worth having some sense of the practical meaning of robot rights before we consider their entitlement to them.

I’m not going to say anything particularly ground-breaking. I’m going to follow the standard Hohfeldian account of rights — one that has been used for over 100 years. According to this account, rights claims — e.g. the claim that you have a right to privacy — can be broken down into a set of four possible ‘incidents’: (i) a privilege; (ii) a claim; (iii) a power; and (iv) an immunity. So, in the case of a right to privacy, you could be claiming one or more of the following four things:

- Privilege: That you have a liberty or privilege to do as you please within a certain zone of privacy.

- Claim: That others have a duty not to encroach upon you in that zone of privacy.

- Power: That you have the power to waive your claim-right not to be interfered with in that zone of privacy.

- Immunity: That you are legally protected against others trying to waive your claim-right on your behalf.

The blog post is here.

Monday, December 25, 2017

First Baby Born To U.S. Uterus Transplant Patient Raises Ethics Questions

Greta Jochem

NPR.org

Originally published December 5, 2017

Here is an excerpt:

We mention that not everyone is celebrating this. It raises some ethical questions. Is it possible with a procedure that is so experimental, so risky, to get informed consent from women who desperately want to have a baby?

Dr. Testa: I doubt it is possible for lay people to have informed consent about anything we do in medicine, if you ask me. This is even more complicated because we are going into uncharted waters. ... I think that we go through years of studying to understand what we do, and to achieve mastering the things we do. And then we pretend that in ten minutes we can explain something to anybody. ... I don't think it's really possible.

... We try to use the simplest terms we can think about and then we leave it to the autonomy of the patients, in this case not even patients, these women, to make the decisions. I think we really refrain, and it was really important for us, from any pressure of any kind from our side but then of course, the inner pressure of this woman to have a child I think drove the entire process and their decision at the end.

The article is here.

Sunday, December 24, 2017

Moral Choices for Today’s Physician

Donald M. Berwick

JAMA. 2017;318(21):2081-2082.

Here is an excerpt:

Hospitals today play the games afforded by an opaque and fragmented payment system and by the concentration of market share to near-monopoly levels that allow them to elevate costs and prices nearly at will, confiscating resources from other badly needed enterprises, both inside health (like prevention) and outside (like schools, housing, and jobs).

And this unfairness—this self-interest—this defense of local stakes at the expense of fragile communities and disadvantaged populations goes far, far beyond health care itself. So does the physician’s ethical duty. Two examples help make the point.

In my view, the biggest travesty in current US social policy is not the failure to fund health care properly or the pricing games of health care companies. It is the nation’s criminal justice system, incarcerating and then stealing the spirit and hope of by far a larger proportion of our population than in any other developed nation on earth. If taking the life-years and self-respect of millions of youth (with black individuals being imprisoned at more than five times the rate of whites), leaving them without choice, freedom, or the hope of growth is not a health problem, then what is?

The article is here.

Saturday, December 23, 2017

What Makes Moral Disgust Special? An Integrative Functional Review

Giner-Sorolla, Roger and Kupfer, Tom R. and Sabo, John S. (2018)

Advances in Experimental Social Psychology, 57

The role of disgust in moral psychology has been a matter of much controversy and experimentation over the past 20 or so years. We present here an integrative look at the literature, organized according to the four functions of emotion proposed by integrative functional theory: appraisal, associative, self-regulation, and communicative. Regarding appraisals, we review experimental, personality, and neuroscientific work that has shown differences between elicitors of disgust and anger in moral contexts, with disgust responding more to bodily moral violations such as incest, and anger responding more to sociomoral violations such as theft. We also present new evidence for interpreting the phenomenon of sociomoral disgust as an appraisal of bad character in a person. The associative nature of disgust is shown by evidence for “unreasoning disgust,” in which associations to bodily moral violations are not accompanied by elaborated reasons, and not modified by appraisals such as harm or intent. We also critically examine the literature about the ability of incidental disgust to intensify moral judgments associatively. For disgust's self-regulation function, we consider the possibility that disgust serves as an existential defense, regulating avoidance of thoughts that might threaten our basic self-image as living humans. Finally, we discuss new evidence from our lab that moral disgust serves a communicative function, implying that expressions of disgust serve to signal one's own moral intentions even when a different emotion is felt internally on the basis of appraisal. Within the scope of the literature, there is evidence that all four functions of Giner-Sorolla’s (2012) integrative functional theory of emotion may be operating, and that their variety can help explain some of the paradoxes of disgust.

The information is here.

Friday, December 22, 2017

Is Technology Value-Neutral? New Technologies and Collective Action Problems

John Danaher

Philosophical Disquisitions

Originally published December 3, 2017

Here is an excerpt:

Value-neutrality is a seductive position. For most of human history, technology has been the product of human agency. In order for a technology to come into existence, and have any effect on the world, it must have been conceived, created and utilised by a human being. There has been a necessary dyadic relationship between humans and technology. This has meant that whenever it comes time to evaluate the impacts of a particular technology on the world, there is always some human to share in the praise or blame. And since we are so comfortable with praising and blaming our fellow human beings, it’s very easy to suppose that they share all the praise and blame.

Note how I said that this has been true for ‘most of human history’. There is one obvious way in which technology could cease to be value-neutral: if technology itself has agency. In other words, if technology develops its own preferences and values, and acts to pursue them in the world. The great promise (and fear) about artificial intelligence is that it will result in forms of technology that do exactly that (and that can create other forms of technology that do exactly that). Once we have full-blown artificial agents, the value-neutrality thesis may no longer be so seductive.

We are almost there, but not quite. For the time being, it is still possible to view all technologies in terms of the dyadic relationship that makes value-neutrality more plausible.

The article is here.

Professional Self-Care to Prevent Ethics Violations

Claire Zilber

The Ethical Professor

Originally published December 4, 2017

Here is an excerpt:

Although there are many variables that lead a professional to violate an ethics rule, one frequent contributing factor is impairment from stress caused by a family member's illness (sick child, dying parent, spouse's chronic health condition, etc.). Some health care providers who have been punished by their licensing board, hospital board or practice group for an ethics violation tell similar stories of being under unusual levels of stress because of a family member who was ill. In that context, they deviated from their usual behavior.

For example, a surgeon whose son was mentally ill prescribed psychotropic medications to him because he refused to go to a psychiatrist. This surgeon was entering into a dual relationship with her child and prescribing outside of her area of competence, but felt desperate to help her son. Another physician, deeply unsettled by his wife’s diagnosis with and treatment for breast cancer, had an extramarital affair with a nurse who was also his employee. This physician sought comfort without thinking about the boundaries he was violating at work, the risk he was creating for his practice, or the harm he was causing to his marriage.

Physicians cannot avoid stressful events at work and in their personal lives, but they can exert some control over how they adapt to or manage that stress. Physician self-care begins with self-awareness, which can be supported by such practices as mindfulness meditation, reflective writing, supervision, or psychotherapy. Self-awareness increases compassion for the self and for others, and reduces burnout.

The article is here.

Thursday, December 21, 2017

An AI That Can Build AI

Dom Galeon and Kristin Houser

Futurism.com

Originally published on December 1, 2017

Here is an excerpt:

Thankfully, world leaders are working fast to ensure such systems don’t lead to any sort of dystopian future.

Amazon, Facebook, Apple, and several others are all members of the Partnership on AI to Benefit People and Society, an organization focused on the responsible development of AI. The Institute of Electrical and Electronics Engineers (IEEE) has proposed ethical standards for AI, and DeepMind, a research company owned by Google’s parent company Alphabet, recently announced the creation of a group focused on the moral and ethical implications of AI.

Various governments are also working on regulations to prevent the use of AI for dangerous purposes, such as autonomous weapons, and so long as humans maintain control of the overall direction of AI development, the benefits of having an AI that can build AI should far outweigh any potential pitfalls.

The information is here.

The Sex Robots Are Coming – an intriguing report into the mind-boggling world of adult dolls

Jasper Rees

The Telegraph

Originally posted November 30, 2017

Sex robots: where do you start? In a Californian laboratory, obviously, where a hot next generation of Frankenstein’s monster is being conjured into existence. The latest prototype is a buxom object called Harmony who talks dirty in (for some reason) a Scottish accent. We made her acquaintance in The Sex Robots Are Coming (Channel 4) which, for reasons one needn’t explain, was not necessarily an accurate title.

Sexbots are the next big thing in Artificial Intelligence. We met James, a gentle lantern-jawed man from Atlanta whose current harem of life-size dolls uncomplainingly submit to a regime of two to four couplings a week in a host of positions. The only drawback, it seemed, was they couldn’t tell him they love him like a sexbot would.

These things were being fixed in the lab, which looked like a charnel house of serried butts and decapitated manikins. The task of chief engineer Matt was to turn all this plasticated form into a set of mechanised emotions. He was developing a range of personalities, he said, though the array of demeaning stereotypes didn’t seem to include the harridan or the hysteric.

The article is here.

Wednesday, December 20, 2017

Americans have always been divided over morality, politics and religion

Andrew Fiala

The Fresno Bee

Originally published December 1, 2017

Our country seems more divided than ever. Recent polls from the Pew Center and the Washington Post make this clear. The Post concludes that seven in 10 Americans say we have “reached a dangerous low point” of divisiveness. A significant majority of Americans think our divisions are as bad as they were during the Vietnam War.

But let’s be honest, we have always been divided. Free people always disagree about morality, politics and religion. We disagree about abortion, euthanasia, gay marriage, drug legalization, pornography, the death penalty and a host of other issues. We also disagree about taxation, inequality, government regulation, race, poverty, immigration, national security, environmental protection, gun control and so on.

Beneath our moral and political disagreements are deep religious differences. Atheists want religious superstitions to die out. Theists think we need God’s guidance. And religious people disagree among themselves about God, morality and politics.

The post is here.

Can psychopathic offenders discern moral wrongs? A new look at the moral/conventional distinction.

Aharoni, E., Sinnott-Armstrong, W., & Kiehl, K. A.

Journal of Abnormal Psychology, 121(2), 484-497. (2012)

Abstract

A prominent view of psychopathic moral reasoning suggests that psychopathic individuals cannot properly distinguish between moral wrongs and other types of wrongs. The present study evaluated this view by examining the extent to which 109 incarcerated offenders with varying degrees of psychopathy could distinguish between moral and conventional transgressions relative to each other and to nonincarcerated healthy controls. Using a modified version of the classic Moral/Conventional Transgressions task that uses a forced-choice format to minimize strategic responding, the present study found that total psychopathy score did not predict performance on the task. Task performance was explained by some individual subfacets of psychopathy and by other variables unrelated to psychopathy, such as IQ. The authors conclude that, contrary to earlier claims, insufficient data exist to infer that psychopathic individuals cannot know what is morally wrong.

The article is here.

Tuesday, December 19, 2017

Beyond Blaming the Victim: Toward a More Progressive Understanding of Workplace Mistreatment

Lilia M. Cortina, Verónica Caridad Rabelo, & Kathryn J. Holland

Industrial and Organizational Psychology

Published online: 21 November 2017

Theories of human aggression can inform research, policy, and practice in organizations. One such theory, victim precipitation, originated in the field of criminology. According to this perspective, some victims invite abuse through their personalities, styles of speech or dress, actions, and even their inactions. That is, they are partly at fault for the wrongdoing of others. This notion is gaining purchase in industrial and organizational (I-O) psychology as an explanation for workplace mistreatment. The first half of our article provides an overview and critique of the victim precipitation hypothesis. After tracing its history, we review the flaws of victim precipitation as catalogued by scientists and practitioners over several decades. We also consider real-world implications of victim precipitation thinking, such as the exoneration of violent criminals. Confident that I-O can do better, the second half of this article highlights alternative frameworks for researching and redressing hostile work behavior. In addition, we discuss a broad analytic paradigm—perpetrator predation—as a way to understand workplace abuse without blaming the abused. We take the position that these alternative perspectives offer stronger, more practical, and more progressive explanations for workplace mistreatment. Victim precipitation, we conclude, is an archaic ideology. Criminologists have long since abandoned it, and so should we.

The article is here.

Health Insurers Are Still Skimping On Mental Health Coverage

Jenny Gold

Kaiser Health News/NPR

Originally published November 30, 2017

It has been nearly a decade since Congress passed the Mental Health Parity and Addiction Equity Act, with its promise to make mental health and substance abuse treatment just as easy to get as care for any other condition. Yet today, amid an opioid epidemic and a spike in the suicide rate, patients are still struggling to get access to treatment.

That is the conclusion of a national study published Thursday by Milliman, a risk management and health care consulting company. The report was released by a coalition of mental health and addiction advocacy organizations.

Among the findings:

- In 2015, behavioral care was four to six times more likely to be provided out-of-network than medical or surgical care.

- Insurers paid primary care providers 20 percent more for the same types of care than they paid addiction and mental health care specialists, including psychiatrists.

- State statistics vary widely. In New Jersey, 45 percent of office visits for behavioral health care were out-of-network. In Washington, D.C., it was 63 percent.

The article is here.

Monday, December 18, 2017

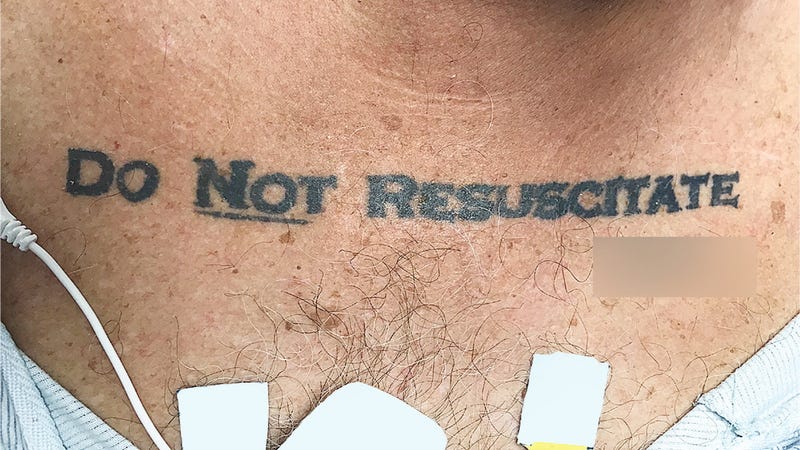

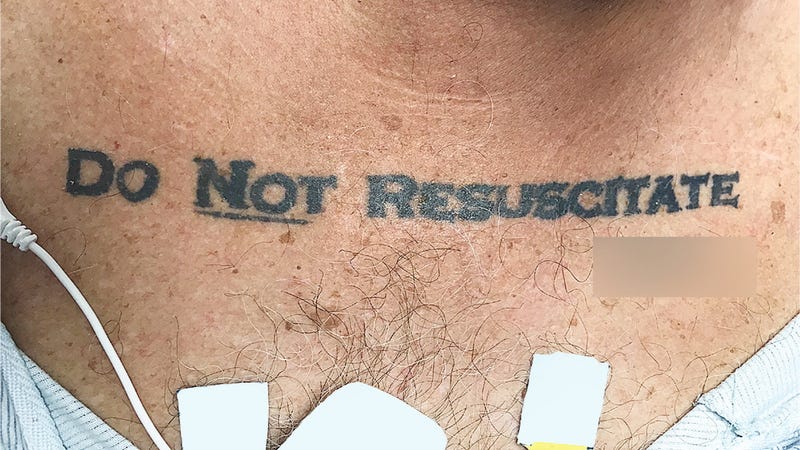

Unconscious Patient With 'Do Not Resuscitate' Tattoo Causes Ethical Conundrum at Hospital

George Dvorsky

Gizmodo

Originally published November 30, 2017

When an unresponsive patient arrived at a Florida hospital ER, the medical staff was taken aback upon discovering the words “DO NOT RESUSCITATE” tattooed onto the man’s chest—with the word “NOT” underlined and with his signature beneath it. Confused and alarmed, the medical staff chose to ignore the apparent DNR request—but not without alerting the hospital’s ethics team, who had a different take on the matter.

But with the “DO NOT RESUSCITATE” tattoo glaring back at them, the ICU team was suddenly confronted with a serious dilemma. The patient arrived at the hospital without ID, the medical staff was unable to contact next of kin, and efforts to revive or communicate with the patient were futile. The medical staff had no way of knowing if the tattoo was representative of the man’s true end-of-life wishes, so they decided to play it safe and ignore it.

The article is here.

Is Pulling the Lever Sexy? Deontology as a Downstream Cue to Long-Term Mate Quality

Mitch Brown and Donald Sacco

Journal of Social and Personal Relationships

November 2017

Abstract

Deontological and utilitarian moral decisions have unique communicative functions within the context of group living. Deontology more strongly communicates prosocial intentions, fostering greater perceptions of trust and desirability in general affiliative contexts. This general trustworthiness may extend to perceptions of fidelity in romantic relationships, leading to perceptions of deontological persons as better long-term mates, relative to utilitarians. In two studies, participants indicated desirability of both deontologists and utilitarians in long- and short-term mating contexts. In Study 1 (n = 102), women perceived a deontological man as more interested in long-term bonds, more desirable for long-term mating, and less prone to infidelity, relative to a utilitarian man. However, utilitarian men were undesirable as short-term mates. Study 2 (n = 112) had both men and women rate opposite sex targets’ desirability after learning of their moral decisions in a trolley problem. We replicated women’s preference for deontological men as long-term mates. Interestingly, both men and women reporting personal deontological motives were particularly sensitive to deontology communicating long-term desirability and fidelity, which could be a product of the general affiliative signal from deontology. Thus, one’s moral basis for decision-making, particularly deontologically-motivated moral decisions, may communicate traits valuable in long-term mating contexts.

The research is here.

Sunday, December 17, 2017

The Impenetrable Program Transforming How Courts Treat DNA

Jessica Pishko

wired.com

Originally posted November 29, 2017

Here is an excerpt:

But now legal experts, along with Johnson’s advocates, are joining forces to argue to a California court that TrueAllele—the seemingly magic software that helped law enforcement analyze the evidence that tied Johnson to the crimes—should be forced to reveal the code that sent Johnson to prison. This code, they say, is necessary in order to properly evaluate the technology. In fact, they say, justice from an unknown algorithm is no justice at all.

As technology progresses, the law lags behind. As John Oliver commented last month, law enforcement and lawyers rarely understand the science behind detective work. Over the years, various types of “junk science” have been discredited. Arson burn patterns, bite marks, hair analysis, and even fingerprints have all been found to be less accurate than previously thought. A September 2016 report by President Obama’s Council of Advisors on Science and Technology found that many of the common techniques law enforcement has historically relied on lack common standards.

In this climate, DNA evidence has been a modern miracle. DNA remains the gold standard for solving crimes, bolstered by academics, verified scientific studies, and experts around the world. Since the advent of DNA testing, nearly 200 people have been exonerated using newly tested evidence; in some places, courts will only consider exonerations with DNA evidence. Juries, too, have become more trusting of DNA, a response known popularly as the “CSI Effect.” A number of studies suggest that the presence of DNA evidence increases the likelihood of conviction or a plea agreement.

The article is here.

Punish the Perpetrator or Compensate the Victim?

Yingjie Liu, Lin Li, Li Zheng, and Xiuyan Guo

Front. Psychol., 28 November 2017

Abstract

Third-party punishment and third-party compensation are primary responses to observed norm violations. Previous studies mostly investigated these behaviors in a gain rather than a loss context, and few studies have directly compared the two behaviors. We conducted three experiments to investigate third-party punishment and third-party compensation in gain and loss contexts. Participants observed two people playing a Dictator Game to share an amount of gain or loss, in which the proposer sometimes proposed an unfair distribution. In Study 1A, participants decided whether to punish the proposer. In Study 1B, participants decided whether to compensate the recipient or to do nothing. These two experiments explored how gain and loss contexts might affect the willingness to altruistically punish a perpetrator or to compensate a victim of unfairness. Results suggested that both third-party punishment and compensation were stronger in the loss context. Study 2 directly compared third-party punishment and third-party compensation in both contexts by allowing participants to choose between punishment, compensation, and keeping. Participants chose compensation more often than punishment in the loss context, and chose punishment more often in the gain context. Empathic concern partly explained the between-context differences in altruistic compensation and punishment. Our findings provide insights into the modulating effect of context on third-party altruistic decisions.

The research is here.

Saturday, December 16, 2017

Does Religion Make People Moral?

Mustafa Akyol

The New York Times

Originally published November 28, 2017

Does religion really make people more moral human beings? Or does the gap between morality and the moralists — a gap evident in Turkey today and in many other societies around the world — reveal an ugly hypocrisy behind all religion?

My humble answer is: It depends. Religion can work in two fundamentally different ways: It can be a source of self-education, or it can be a source of self-glorification. Self-education can make people more moral, while self-glorification can make them considerably less moral.

Religion can be a source of self-education, because religious texts often have moral teachings with which people can question and instruct themselves. The Quran, just like the Bible, has such pearls of wisdom. It tells believers to “uphold justice” “even against yourselves or your parents and relatives.” It praises “those who control their wrath and are forgiving toward mankind.” It counsels: “Repel evil with what is better so your enemy will become a bosom friend.” A person who follows such virtuous teachings will likely develop a moral character, just as a person who follows similar teachings in the Bible will.

The article is here.

Friday, December 15, 2017

The Vortex

Oliver Burkeman

The Guardian

Originally posted November 30, 2017

Here is an excerpt:

I realise you don’t need me to tell you that something has gone badly wrong with how we discuss controversial topics online. Fake news is rampant; facts don’t seem to change the minds of those in thrall to falsehood; confirmation bias drives people to seek out only the information that bolsters their views, while dismissing whatever challenges them. (In the final three months of the 2016 presidential election campaign, according to one analysis by BuzzFeed, the top 20 fake stories were shared more online than the top 20 real ones: to a terrifying extent, news is now more fake than not.) Yet, to be honest, I’d always assumed that the problem rested solely on the shoulders of other, stupider, nastier people. If you’re not the kind of person who makes death threats, or uses misogynistic slurs, or thinks Hillary Clinton’s campaign manager ran a child sex ring from a Washington pizzeria – if you’re a basically decent and undeluded sort, in other words – it’s easy to assume you’re doing nothing wrong.

But this, I am reluctantly beginning to understand, is self-flattery. One important feature of being trapped in the Vortex, it turns out, is the way it looks like everyone else is trapped in the Vortex, enslaved by their anger and delusions, obsessed with point-scoring and insult-hurling instead of with establishing the facts – whereas you’re just speaking truth to power. Yet in reality, when it comes to the divisive, depressing, energy-sapping nightmare that is modern online political debate, it’s like the old line about road congestion: you’re not “stuck in traffic”. You are the traffic.

The article is here.

Loneliness Might Be a Killer, but What’s the Best Way to Protect Against It?

Rita Rubin

JAMA. 2017;318(19):1853-1855.

Here is an excerpt:

“I think that it’s clearly a [health] risk factor,” first author Nancy Donovan, MD, said of loneliness. “Various types of psychosocial stress appear to be bad for the human body and brain and are clearly associated with lots of adverse health consequences.”

Though the findings overall are mixed, the best current evidence suggests that loneliness may cause adverse health effects by promoting inflammation, said Donovan, a geriatric psychiatrist at the Center for Alzheimer Research and Treatment at Brigham and Women’s Hospital in Boston.

Loneliness might also be an early, relatively easy-to-detect marker for preclinical Alzheimer disease, suggests an article Donovan coauthored. She and her collaborators recently reported in JAMA Psychiatry that loneliness was associated with a higher cortical amyloid burden in 79 cognitively normal elderly adults. Cortical amyloid burden is being investigated as a potential biomarker for identifying asymptomatic adults with the greatest risk of Alzheimer disease. However, large-scale population screening for amyloid burden is unlikely to be practical.