Sarah Franklin

Nature.com

Originally posted October 29, 2019

Here is an excerpt:

Beyond bewilderment

Just as the ramifications of the birth of modern biology were hard to delineate in the late nineteenth century, so there is a sense of ethical bewilderment today. The feeling of being overwhelmed is exacerbated by a lack of regulatory infrastructure or adequate policy precedents. Bioethics, once a beacon of principled pathways to policy, is increasingly lost, like Simba, in a sea of thundering wildebeest. Many of the ethical challenges arising from today’s turbocharged research culture involve rapidly evolving fields that are pursued by globally competitive projects and teams, spanning disparate national regulatory systems and cultural norms. The unknown unknowns grow by the day.

The bar for proper scrutiny has not so much been lowered as sawn to pieces: dispersed, area-specific ethical oversight now exists in a range of forms for every acronym from AI (artificial intelligence) to GM organisms. A single, Belmont-style umbrella no longer seems likely, or even feasible. Much basic science is privately funded and therefore secretive. And the mergers between machine learning and biological synthesis raise additional concerns. Instances of enduring and successful international regulation are rare. The stereotype of bureaucratic, box-ticking ethical compliance is no longer fit for purpose in a world of CRISPR twins, synthetic neurons and self-driving cars.

Bioethics evolves, as does any other branch of knowledge. The post-millennial trend has been to become more global, less canonical and more reflexive. The field no longer relies on philosophically derived mandates codified into textbook formulas. Instead, it functions as a dashboard of pragmatic instruments, and is less expert-driven, more interdisciplinary, less multipurpose and more bespoke. In the wake of the ‘turn to dialogue’ in science, bioethics often looks more like public engagement — and vice versa. Policymakers, polling companies and government quangos tasked with organizing ethical consultations on questions such as mitochondrial donation (‘three-parent embryos’, as the media would have it) now perform the evaluations formerly assigned to bioethicists.

The info is here.

Welcome to the Nexus of Ethics, Psychology, Morality, Philosophy and Health Care

Showing posts with label Progress. Show all posts

Friday, December 6, 2019

Wednesday, November 20, 2019

Super-precise new CRISPR tool could tackle a plethora of genetic diseases

Heidi Ledford

nature.com

Originally posted October 21, 2019

For all the ease with which the wildly popular CRISPR–Cas9 gene-editing tool alters genomes, it’s still somewhat clunky and prone to errors and unintended effects. Now, a recently developed alternative offers greater control over genome edits — an advance that could be particularly important for developing gene therapies.

The alternative method, called prime editing, improves the chances that researchers will end up with only the edits they want, instead of a mix of changes that they can’t predict. The tool, described in a study published on 21 October in Nature, also reduces the ‘off-target’ effects that are a key challenge for some applications of the standard CRISPR–Cas9 system. That could make prime-editing-based gene therapies safer for use in people.

The tool also seems capable of making a wider variety of edits, which might one day allow it to be used to treat the many genetic diseases that have so far stymied gene-editors. David Liu, a chemical biologist at the Broad Institute of MIT and Harvard in Cambridge, Massachusetts and lead study author, estimates that prime editing might help researchers tackle nearly 90% of the more than 75,000 disease-associated DNA variants listed in ClinVar, a public database developed by the US National Institutes of Health.

The specificity of the changes that this latest tool is capable of could also make it easier for researchers to develop models of disease in the laboratory, or to study the function of specific genes, says Liu.

The info is here.

Thursday, October 31, 2019

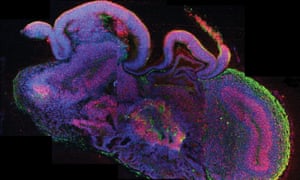

Scientists 'may have crossed ethical line' in growing human brains

Ian Sample

The Guardian

Originally posted October 20, 2019

Neuroscientists may have crossed an “ethical rubicon” by growing lumps of human brain in the lab, and in some cases transplanting the tissue into animals, researchers warn.

The creation of mini-brains or brain “organoids” has become one of the hottest fields in modern neuroscience. The blobs of tissue are made from stem cells and, while they are only the size of a pea, some have developed spontaneous brain waves, similar to those seen in premature babies.

Many scientists believe that organoids have the potential to transform medicine by allowing them to probe the living brain like never before. But the work is controversial because it is unclear where it may cross the line into human experimentation.

On Monday, researchers will tell the world’s largest annual meeting of neuroscientists that some scientists working on organoids are “perilously close” to crossing the ethical line, while others may already have done so by creating sentient lumps of brain in the lab.

“If there’s even a possibility of the organoid being sentient, we could be crossing that line,” said Elan Ohayon, the director of the Green Neuroscience Laboratory in San Diego, California. “We don’t want people doing research where there is potential for something to suffer.”

The info is here.

Sunday, October 13, 2019

A Successful Artificial Memory Has Been Created

Robert Martone

Scientific American

Originally posted August 27, 2019

Here is the conclusion:

There are legitimate motives underlying these efforts. Memory has been called “the scribe of the soul,” and it is the source of one’s personal history. Some people may seek to recover lost or partially lost memories. Others, such as those afflicted with post-traumatic stress disorder or chronic pain, might seek relief from traumatic memories by trying to erase them.

The methods used here to create artificial memories will not be employed in humans anytime soon: none of us are transgenic like the animals used in the experiment, nor are we likely to accept multiple implanted fiber-optic cables and viral injections. Nevertheless, as technologies and strategies evolve, the possibility of manipulating human memories becomes all the more real. And the involvement of military agencies such as DARPA invariably renders the motivations behind these efforts suspect. Are there things we all need to be afraid of or that we must or must not do? The dystopian possibilities are obvious.

Creating artificial memories brings us closer to learning how memories form and could ultimately help us understand and treat dreadful diseases such as Alzheimer’s. Memories, however, cut to the core of our humanity, and we need to be vigilant that any manipulations are approached ethically.

The info is here.

Sunday, September 29, 2019

The brain, the criminal and the courts

knowablemagazine.org

Originally posted August 30, 2019

Here is an excerpt:

It remains to be seen if all this research will yield actionable results. In 2018, Hoffman, who has been a leader in neurolaw research, wrote a paper discussing potential breakthroughs and dividing them into three categories: near term, long term and “never happening.” He predicted that neuroscientists are likely to improve existing tools for chronic pain detection in the near future, and in the next 10 to 50 years he believes they’ll reliably be able to detect memories and lies, and to determine brain maturity.

But brain science will never gain a full understanding of addiction, he suggested, or lead courts to abandon notions of responsibility or free will (a prospect that gives many philosophers and legal scholars pause).

Many realize that no matter how good neuroscientists get at teasing out the links between brain biology and human behavior, applying neuroscientific evidence to the law will always be tricky. One concern is that brain studies ordered after the fact may not shed light on a defendant’s motivations and behavior at the time a crime was committed — which is what matters in court. Another concern is that studies of how an average brain works do not always provide reliable information on how a specific individual’s brain works.

“The most important question is whether the evidence is legally relevant. That is, does it help answer a precise legal question?” says Stephen J. Morse, a scholar of law and psychiatry at the University of Pennsylvania. He is in the camp who believe that neuroscience will never revolutionize the law, because “actions speak louder than images,” and that in a legal setting, “if there is a disjunct between what the neuroscience shows and what the behavior shows, you’ve got to believe the behavior.” He worries about the prospect of “neurohype,” and attorneys who overstate the scientific evidence.

The info is here.

Sunday, September 22, 2019

The Ethics Of Hiding Your Data From the Machines

Molly Wood

wired.com

Originally posted August 22, 2019

Here is an excerpt:

There’s also a real and reasonable fear that companies or individuals will take ethical liberties in the name of pushing hard toward a good solution, like curing a disease or saving lives. This is not an abstract problem: The co-founder of Google’s artificial intelligence lab, DeepMind, was placed on leave earlier this week after some controversial decisions—one of which involved the illegal use of over 1.5 million hospital patient records in 2017.

So sticking with the medical kick I’m on here, I propose that companies work a little harder to imagine the worst-case scenario surrounding the data they’re collecting. Study the side effects like you would a drug for restless leg syndrome or acne or hepatitis, and offer us consumers a nice, long, terrifying list of potential outcomes so we actually know what we’re getting into.

And for we consumers, well, a blanket refusal to offer up our data to the AI gods isn’t necessarily the good choice either. I don’t want to be the person who refuses to contribute my genetic data via 23andMe to a massive research study that could, and I actually believe this is possible, lead to cures and treatments for diseases like Parkinson’s and Alzheimer’s and who knows what else.

I also think I deserve a realistic assessment of the potential for harm to find its way back to me, because I didn’t think through or wasn’t told all the potential implications of that choice—like how, let’s be honest, we all felt a little stung when we realized the 23andMe research would be through a partnership with drugmaker (and reliable drug price-hiker) GlaxoSmithKline. Drug companies, like targeted ads, are easy villains—even though this partnership actually could produce a Parkinson’s drug. But do we know what GSK’s privacy policy looks like? That deal was a level of sharing we didn’t necessarily expect.

The info is here.

Sunday, September 15, 2019

To Study the Brain, a Doctor Puts Himself Under the Knife

Adam Piore

MIT Technology Review

Originally published November 9, 2015

Here are two excerpts:

Kennedy became convinced that the way to take his research to the next level was to find a volunteer who could still speak. For almost a year he searched for a volunteer with ALS who still retained some vocal abilities, hoping to take the patient offshore for surgery. “I couldn’t get one. So after much thinking and pondering I decided to do it on myself,” he says. “I tried to talk myself out of it for years.”

The surgery took place in June 2014 at a 13-bed Belize City hospital a thousand miles south of his Georgia-based neurology practice and also far from the reach of the FDA. Prior to boarding his flight, Kennedy did all he could to prepare. At his small company, Neural Signals, he fabricated the electrodes the neurosurgeon would implant into his motor cortex—even chose the spot where he wanted them buried. He put aside enough money to support himself for a few months if the surgery went wrong. He had made sure his living will was in order and that his older son knew where he was.

(cut)

To some researchers, Kennedy’s decisions could be seen as unwise, even unethical. Yet there are cases where self-experiments have paid off. In 1984, an Australian doctor named Barry Marshall drank a beaker filled with bacteria in order to prove they caused stomach ulcers. He later won the Nobel Prize. “There’s been a long tradition of medical scientists experimenting on themselves, sometimes with good results and sometimes without such good results,” says Jonathan Wolpaw, a brain-computer interface researcher at the Wadsworth Center in New York. “It’s in that tradition. That’s probably all I should say without more information.”

The info is here.

Thursday, August 29, 2019

Why Businesses Need Ethics to Survive Disruption

Mathew Donald

HR Technologist

Originally posted July 29, 2019

Here is an excerpt:

Using Ethics as the Guideline

An alternative model for an organization in disruption may be to connect staff and their organization to society values. Whilst these standards may not all be written, the staff will generally know right from wrong, where they live in harmony with the broad rule of society. People do not normally steal, drive on the wrong side of the road or take advantage of the poor. Whilst written laws may prevail and guide society, it is clear that most people follow unwritten society values. People make decisions on moral grounds daily, each based on their beliefs, refraining from actions that may be frowned upon by their friends and neighbors.

Ethics may be a key ingredient to add to your organization in a disruptive environment, as it may guide your staff through new situations without the necessity for a written rule or government law. It would seem that ethics based on a sense of fair play, not taking undue advantage, not overusing power and control, alignment with everyday society values may address some of this heightened risk in the disruption. Once the set of ethics is agreed upon and imbibed by the staff, it may be possible for them to review new transactions, new situations, and potential opportunities without necessarily needing to see written guidelines.

The info is here.

Monday, July 29, 2019

Experts question the morality of creating human-monkey ‘chimeras’

Kay Vandette

www.earth.com

Originally published July 5, 2019

Earlier this year, scientists at the Kunming Institute of Zoology of the Chinese Academy of Sciences announced they had inserted a human gene into embryos that would become rhesus macaques, monkeys that share about 93 percent of their DNA with humans. The research, which was designed to give experts a better understanding of human brain development, has sparked controversy over whether this type of experimentation is ethical.

Some scientists believe that it is time to use human-monkey animals to pursue new insights into the progression of diseases such as Alzheimer’s. These genetically modified monkeys are referred to as chimeras, which are named after a mythical animal that consists of parts taken from various animals.

A resource guide on the science and ethics of chimeras written by Yale University researchers suggests that it is time to “cautiously” explore the creation of human-monkey chimeras.

“The search for a better animal model to simulate human disease has been a ‘holy grail’ of biomedical research for decades,” the Yale team wrote in Chimera Research: Ethics and Protocols. “Realizing the promise of human-monkey chimera research in an ethically and scientifically appropriate manner will require a coordinated approach.”

A team of experts led by Dr. Douglas Munoz of Queen’s University has been studying the onset of Alzheimer’s disease in monkeys by using injections of beta-amyloid. The accumulation of this protein in the brain is believed to kill nerve cells and initiate the degenerative process.

The info is here.

Wednesday, June 26, 2019

The evolution of human cooperation

Coren Apicella and Joan Silk

Current Biology, Volume 29 (11), pp 447-450.

Darwin viewed cooperation as a perplexing challenge to his theory of natural selection. Natural selection generally favors the evolution of behaviors that enhance the fitness of individuals. Cooperative behavior, which increases the fitness of a recipient at the expense of the donor, contradicts this logic. William D. Hamilton helped to solve the puzzle when he showed that cooperation can evolve if cooperators direct benefits selectively to other cooperators (i.e. assortment). Kinship, group selection and the previous behavior of social partners all provide mechanisms for assortment (Figure 1), and kin selection and reciprocal altruism are the foundation of the kinds of cooperative behavior observed in many animals. Humans also bias cooperation in favor of kin and reciprocating partners, but the scope, scale, and variability of human cooperation greatly exceed that of other animals. Here, we introduce derived features of human cooperation in the context in which they originally evolved, and discuss the processes that may have shaped the evolution of our remarkable capacity for cooperation. We argue that culturally-evolved norms that specify how people should behave provide an evolutionarily novel mechanism for assortment, and play an important role in sustaining derived properties of cooperation in human groups.

Here is a portion of the Summary

Cooperative foraging and breeding provide the evolutionary backdrop for understanding the evolution of cooperation in humans, as the returns from cooperating in these activities would have been high in our hunter-gatherer ancestors. Still, explaining how our ancestors effectively dealt with the problem of free-riders within this context remains a challenge. Derived features of human cooperation, however, give us some indication of the mechanisms that could lead to assortativity. These derived features include: first, the scope of cooperation — cooperation is observed between unrelated and often short-term interactors; second, the scale of cooperation — cooperation extends beyond pairs to include circumscribed groups that vary in size and identity; and third, variation in cooperation — human cooperation varies in both time and space in accordance with cultural and social norms. We argue that this pattern of findings is best explained by cultural evolutionary processes that generate phenotypic assortment on cooperation via a psychology adapted for cultural learning, norm sensitivity and group-mindedness.

The info is here.

Tuesday, October 2, 2018

For the first time, researchers will release genetically engineered mosquitoes in Africa

Ike Swetlitz

www.statnews.com

Originally posted September 5, 2018

The government of Burkina Faso granted scientists permission to release genetically engineered mosquitoes anytime this year or next, researchers announced Wednesday. It’s a key step in the broader efforts to use bioengineering to eliminate malaria in the region.

The release, which scientists are hoping to execute this month, will be the first time that any genetically engineered animal is released into the wild in Africa. While these particular mosquitoes won’t have any mutations related to malaria transmission, researchers are hoping their release, and the work that led up to it, will help improve the perception of the research and trust in the science among regulators and locals alike. It will also inform future releases.

Teams in three African countries — Burkina Faso, Mali, and Uganda — are building the groundwork to eventually let loose “gene drive” mosquitoes, which would contain a mutation that would significantly and quickly reduce the mosquito population. Genetically engineered mosquitoes have already been released in places like Brazil and the Cayman Islands, though animals with gene drives have never been released in the wild.

The info is here.

Thursday, September 20, 2018

Man-made human 'minibrains' spark debate on ethics and morality

Carolyn Y. Johnson

www.iol.za

Originally posted September 3, 2018

Here is an excerpt:

Five years ago, an ethical debate about organoids seemed to many scientists to be premature. The organoids were exciting because they were similar to the developing brain, and yet they were incredibly rudimentary. They were constrained in how big they could get before cells in the core started dying, because they weren't suffused with blood vessels or supplied with nutrients and oxygen by a beating heart. They lacked key cell types.

Still, there was something different about brain organoids compared with routine biomedical research. Song recalled that one of the amazing but also unsettling things about the early organoids was that they weren't as targeted to develop into specific regions of the brain, so it was possible to accidentally get retinal cells.

"It's difficult to see the eye in a dish," Song said.

Now, researchers are succeeding at keeping organoids alive for longer periods of time. At a talk, Hyun recalled one researcher joking that the lab had sung "Happy Birthday" to an organoid when it was a year old. Some researchers are implanting organoids into rodent brains, where they can stay alive longer and grow more mature. Others are building multiple organoids representing different parts of the brain, such as the hippocampus, which is involved in memory, or the cerebral cortex - the seat of cognition - and fusing them together into larger "assembloids."

Even as scientists express scepticism that brain organoids will ever come close to sentience, they're the ones calling for a broad discussion, and perhaps more oversight. The questions range from the practical to the fantastical. Should researchers make sure that people who donate their cells for organoid research are informed that they could be used to make a tiny replica of parts of their brain? If organoids became sophisticated enough, should they be granted greater protections, like the rules that govern animal research? Without a consensus on what consciousness or pain would even look like in the brain, how will scientists know when they're nearing the limit?

The info is here.

Tuesday, July 3, 2018

What does a portrait of Erica the android tell us about being human?

Nigel Warburton

The Guardian

Originally posted September 9, 2017

Here are two excerpts:

Another traditional answer to the question of what makes us so different, popular for millennia, has been that humans have a non-physical soul, one that inhabits the body but is distinct from it, an ethereal ghostly wisp that floats free at death to enjoy an after-life which may include reunion with other souls, or perhaps a new body to inhabit. To many of us, this is wishful thinking on an industrial scale. It is no surprise that survey results published last week indicate that a clear majority of Britons (53%) describe themselves as non-religious, with a higher percentage of younger people taking this enlightened attitude. In contrast, 70% of Americans still describe themselves as Christians, and a significant number of those have decidedly unscientific views about human origins. Many, along with St Augustine, believe that Adam and Eve were literally the first humans, and that everything was created in seven days.

(cut)

Today a combination of evolutionary biology and neuroscience gives us more plausible accounts of what we are than Descartes did. These accounts are not comforting. They reverse the priority and emphasise that we are animals and provide no evidence for our non-physical existence. Far from it. Nor are they in any sense complete, though there has been great progress. Since Charles Darwin disabused us of the notion that human beings are radically different in kind from other apes by outlining in broad terms the probable mechanics of evolution, evolutionary psychologists have been refining their hypotheses about how we became this kind of animal and not another, why we were able to surpass other species in our use of tools, communication through language and images, and ability to pass on our cultural discoveries from generation to generation.

The article is here.

Tuesday, May 29, 2018

Ethics debate as pig brains kept alive without a body

Pallab Ghosh

BBC.com

Originally published April 27, 2018

Researchers at Yale University have restored circulation to the brains of decapitated pigs, and kept the organs alive for several hours.

Their aim is to develop a way of studying intact human brains in the lab for medical research.

Although there is no evidence that the animals were aware, there is concern that some degree of consciousness might have remained.

Details of the study were presented at a brain science ethics meeting held at the National Institutes of Health (NIH) in Bethesda in Maryland on 28 March.

The work, by Prof Nenad Sestan of Yale University, was discussed as part of an NIH investigation of ethical issues arising from neuroscience research in the US.

Prof Sestan explained that he and his team experimented on more than 100 pig brains.

The information is here.

Tuesday, February 27, 2018

Artificial neurons compute faster than the human brain

Sara Reardon

Nature

Originally published January 26, 2018

Superconducting computing chips modelled after neurons can process information faster and more efficiently than the human brain. That achievement, described in Science Advances on 26 January, is a key benchmark in the development of advanced computing devices designed to mimic biological systems. And it could open the door to more natural machine-learning software, although many hurdles remain before it could be used commercially.

Artificial intelligence software has increasingly begun to imitate the brain. Algorithms such as Google’s automatic image-classification and language-learning programs use networks of artificial neurons to perform complex tasks. But because conventional computer hardware was not designed to run brain-like algorithms, these machine-learning tasks require orders of magnitude more computing power than the human brain does.

“There must be a better way to do this, because nature has figured out a better way to do this,” says Michael Schneider, a physicist at the US National Institute of Standards and Technology (NIST) in Boulder, Colorado, and a co-author of the study.

The article is here.

Wednesday, February 21, 2018

The Federal Right to Try Act of 2017—A Wrong Turn for Access to Investigational Drugs and the Path Forward

Alison Bateman-House and Christopher T. Robertson

JAMA Intern Med. Published online January 22, 2018.

In 2017, President Trump said that “one thing that’s always disturbed”1 him is that the US Food and Drug Administration (FDA) denies access to experimental drugs even “for a patient who’s terminal…[who] is not going to live more than four weeks [anyway.]” Fueled by emotionally charged anecdotes recirculated by libertarian political activists, 38 states have passed Right to Try laws. In 2017, the US Senate approved a bill that would create a national law (Box). As of December 2017, the US House of Representatives was considering the bill.

The article is here.

Friday, February 2, 2018

Has Technology Lost Society's Trust?

Mustafa Suleyman

The RSA.org

Originally published January 8, 2018

Has technology lost society's trust? Mustafa Suleyman, co-founder and Head of Applied AI at DeepMind, considers what tech companies have got wrong, how to fix it and how technology companies can change the world for the better. (7 minute video)

Wednesday, January 31, 2018

I Believe In Intelligent Design....For Robots

Matt Simon

Wired Magazine

Originally published January 3, 2018

Here is an excerpt:

Roboticists are honing their robots by essentially mimicking natural selection. Keep what works, throw out what doesn’t, to optimally adapt a robot to a particular job. “If we want to scrap something totally, we can do that,” says Nick Gravish, who studies the intersection of robotics and biology at UC San Diego. “Or we can take the best pieces from some design and put them in a new design and get rid of the things we don't need.” Think of it, then, like intelligent design—that follows the principles of natural selection.

The caveat being, biology is rather more inflexible than what roboticists are doing. After all, you can give your biped robot two extra limbs and turn it into a quadruped fairly quickly, while animals change their features—cave-dwelling species might lose their eyes, for instance—over thousands of years. “Evolution is as much a trap as a means to advance,” says Gerald Loeb, CEO and co-founder of SynTouch, which is giving robots the power to feel. “Because you get locked into a lot of hardware that worked well in previous iterations and now can't be changed because you've built your whole embryology on it.”

Evolution can still be rather explosive, though. 550 million years ago the Cambrian Explosion kicked off, giving birth to an incredible array of complex organisms. Before that, life was relatively squishier, relatively calmer. But then boom, predators aplenty, scrapping like hell to gain an edge.

The article is here.

Saturday, January 27, 2018

Evolving Morality

Joshua Greene

Aspen Ideas Festival

2017

Human morality is a set of cognitive devices designed to solve social problems. The original moral problem is the problem of cooperation, the “tragedy of the commons” — me vs. us. But modern moral problems are often different, involving what Harvard psychology professor Joshua Greene calls “the tragedy of commonsense morality,” or the problem of conflicting values and interests across social groups — us vs. them. Our moral intuitions handle the first kind of problem reasonably well, but often fail miserably with the second kind. The rise of artificial intelligence compounds and extends these modern moral problems, requiring us to formulate our values in more precise ways and adapt our moral thinking to unprecedented circumstances. Can self-driving cars be programmed to behave morally? Should autonomous weapons be banned? How can we organize a society in which machines do most of the work that humans do now? And should we be worried about creating machines that are smarter than us? Understanding the strengths and limitations of human morality can help us answer these questions.

The one-hour talk on SoundCloud is here.

Tuesday, November 14, 2017

Facial recognition may reveal things we’d rather not tell the world. Are we ready?

Amitha Kalaichandran

The Boston Globe

Originally published October 27, 2017

Here is an excerpt:

Could someone use a smartphone snapshot, for example, to diagnose another person’s child at the playground? The Face2Gene app is currently limited to clinicians; while anyone can download it from the App Store on an iPhone, it can only be used after the user’s healthcare credentials are verified. “If the technology is widespread,” says Lin, “do I see people taking photos of others for diagnosis? That would be unusual, but people take photos of others all the time, so maybe it’s possible. I would obviously worry about the invasion of privacy and misuse if that happened.”

Humans are pre-wired to discriminate against others based on physical characteristics, and programmers could easily manipulate AI programming to mimic human bias. That’s what concerns Anjan Chatterjee, a neuroscientist who specializes in neuroesthetics, the study of what our brains find pleasing. He has found that, relying on baked-in prejudices, we often quickly infer character just from seeing a person’s face. In a paper slated for publication in Psychology of Aesthetics, Creativity, and the Arts, Chatterjee reports that a person’s appearance — and our interpretation of that appearance — can have broad ramifications in professional and personal settings. This conclusion has serious implications for artificial intelligence.

“We need to distinguish between classification and evaluation,” he says. “Classification would be, for instance, using it for identification purposes like fingerprint recognition. . . which was once a privacy concern but seems to have largely faded away. Using the technology for evaluation would include discerning someone’s sexual orientation or for medical diagnostics.” The latter raises serious ethical questions, he says. One day, for example, health insurance companies could use this information to adjust premiums based on a predisposition to a condition.

The article is here.
