Maya Miller and Robin Fields

ProPublica.org

Originally published 16 NOV 23

Here is an excerpt:

State insurance departments are responsible for enforcing these laws, but many are ill-equipped to do so, researchers, consumer advocates and even some regulators say. These agencies oversee all types of insurance, including plans covering cars, homes and people’s health. Yet they employed fewer people last year than they did a decade ago. Their first priority is making sure plans remain solvent; protecting consumers from unlawful denials often takes a backseat.

“They just honestly don’t have the resources to do the type of auditing that we would need,” said Sara McMenamin, an associate professor of public health at the University of California, San Diego, who has been studying the implementation of state mandates.

Agencies often don’t investigate health insurance denials unless policyholders or their families complain. But denials can arrive at the worst moments of people’s lives, when they have little energy to wrangle with bureaucracy. People with plans purchased on HealthCare.gov appealed less than 1% of the time, one study found.

ProPublica surveyed every state’s insurance agency and identified just 45 enforcement actions since 2018 involving denials that have violated coverage mandates. Regulators sometimes treat consumer complaints as one-offs, forcing an insurer to pay for that individual’s treatment without addressing whether a broader group has faced similar wrongful denials.

When regulators have decided to dig deeper, they’ve found that a single complaint is emblematic of a systemic issue impacting thousands of people.

In 2017, a woman complained to Maine’s insurance regulator, saying her carrier, Aetna, broke state law by incorrectly processing claims and overcharging her for services related to the birth of her child. After being contacted by the state, Aetna acknowledged the mistake and issued a refund.

Here's my take:

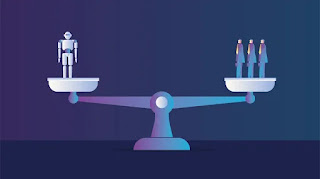

The article explores the ethical issues surrounding health insurance denials and the violation of state laws. The investigation reveals a pattern of health insurance companies systematically denying coverage for medically necessary treatments, even when such denials directly contravene state laws designed to protect patients. The unethical practices extend to various states, indicating a systemic problem within the industry. Patients are often left in precarious situations, facing financial burdens and health risks due to the denial of essential medical services, raising questions about the industry's commitment to prioritizing patient well-being over profit margins.

The article underscores the need for increased regulatory scrutiny and enforcement to hold health insurance companies accountable for violating state laws and compromising patient care. It highlights the ethical imperative for insurers to prioritize their fundamental responsibility to provide coverage for necessary medical treatments and adhere to the legal frameworks in place to safeguard patient rights. The investigation sheds light on the intersection of profit motives and ethical considerations within the health insurance industry, emphasizing the urgency of addressing these systemic issues to ensure that patients receive the care they require without undue financial or health-related consequences.