Lily Frank and Sven Nyholm

Artificial Intelligence and Law

September 2017, Volume 25, Issue 3, pp 305–323

Abstract

The development of highly humanoid sex robots is on the technological horizon. If sex robots are integrated into the legal community as “electronic persons”, the issue of sexual consent arises, which is essential for legally and morally permissible sexual relations between human persons. This paper explores whether it is conceivable, possible, and desirable that humanoid robots should be designed such that they are capable of consenting to sex. We consider reasons for giving both “no” and “yes” answers to these three questions by examining the concept of consent in general, as well as critiques of its adequacy in the domain of sexual ethics; the relationship between consent and free will; and the relationship between consent and consciousness. Additionally we canvass the most influential existing literature on the ethics of sex with robots.

The article is here.

Welcome to the Nexus of Ethics, Psychology, Morality, Philosophy and Health Care

Tuesday, April 10, 2018

Saturday, March 24, 2018

Facebook employs psychologist whose firm sold data to Cambridge Analytica

Paul Lewis and Julia Carrie Wong

The Guardian

Originally published March 18, 2018

Here are two excerpts:

The co-director of a company that harvested data from tens of millions of Facebook users before selling it to the controversial data analytics firm Cambridge Analytica is currently working for the tech giant as an in-house psychologist.

Joseph Chancellor was one of two founding directors of Global Science Research (GSR), the company that harvested Facebook data using a personality app under the guise of academic research and later shared the data with Cambridge Analytica.

He was hired to work at Facebook as a quantitative social psychologist around November 2015, roughly two months after leaving GSR, which had by then acquired data on millions of Facebook users.

Chancellor is still working as a researcher at Facebook’s Menlo Park headquarters in California, where psychologists frequently conduct research and experiments using the company’s vast trove of data on more than 2 billion users.

(cut)

In the months that followed the creation of GSR, the company worked in collaboration with Cambridge Analytica to pay hundreds of thousands of users to take the test as part of an agreement in which they agreed for their data to be collected for academic use.

However, the app also collected the information of the test-takers’ Facebook friends, leading to the accumulation of a data pool tens of millions strong.

That data was sold to Cambridge Analytica as part of a commercial agreement.

Facebook’s “platform policy” allowed only collection of friends’ data to improve user experience in the app and barred it being sold on or used for advertising.

The information is here.

Wednesday, March 7, 2018

The Squishy Ethics of Sex With Robots

Adam Rogers

Wired.com

Originally published February 2, 2018

Here is an excerpt:

Most of the world is ready to accept algorithm-enabled, internet-connected, virtual-reality-optimized sex machines with open arms (arms! I said arms!). The technology is evolving fast, which means two inbound waves of problems. Privacy and security, sure, but even solving those won’t answer two very hard questions: Can a robot consent to having sex with you? Can you consent to sex with it?

One thing that is unquestionable: There is a market. Either through licensing the teledildonics patent or risking lawsuits, several companies have tried to build sex technology that takes advantage of Bluetooth and the internet. “Remote connectivity allows people on opposite ends of the world to control each other’s dildo or sleeve device,” says Maxine Lynn, a patent attorney who writes the blog Unzipped: Sex, Tech, and the Law. “Then there’s also bidirectional control, which is going to be huge in the future. That’s when one sex toy controls the other sex toy and vice versa.”

Vibease, for example, makes a wearable that pulsates in time to synchronized digital books or a partner controlling an app. We-vibe makes vibrators that a partner can control, or set preset patterns. And so on.

The article is here.

Wednesday, January 10, 2018

Failing better

Erik Angner

BPP Blog, the companion blog to the new journal Behavioural Public Policy

Originally posted June 2, 2017

Cass R. Sunstein’s ‘Nudges That Fail’ explores why some nudges work, why some fail, and what should be done in the face of failure. It’s a useful contribution in part because it reminds us that nudging – roughly speaking, the effort to improve people’s welfare by helping them make better choices without interfering with their liberty or autonomy – is harder than it might seem. When people differ in beliefs, values, and preferences, or when they differ in their responses to behavioral interventions, for example, it may be difficult to design a nudge that benefits at least some without violating anyone’s liberty or autonomy. But the paper is a useful contribution also because it suggests concrete, positive steps that may be taken to help us get better simultaneously at enhancing welfare and at respecting liberty and autonomy.

(cut)

Moreover, even if a nudge is on the net welfare enhancing and doesn’t violate any other values, it does not follow that it should be implemented. As economists are fond of telling you, everything has an opportunity cost, and so do nudges. If whatever resources would be used in the implementation of the nudge could be put to better use elsewhere, we would have reason not to implement it. If we did anyway, we would be guilty of the Econ 101 fallacy of ignoring opportunity costs, which would be embarrassing.

The blog post is here.

Monday, December 18, 2017

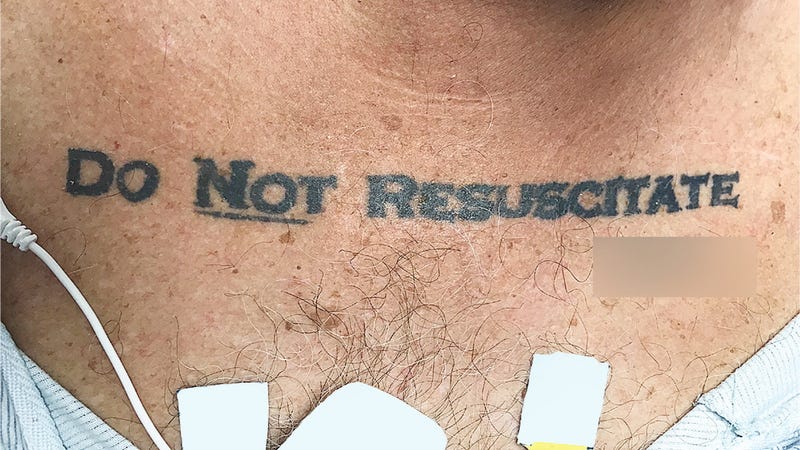

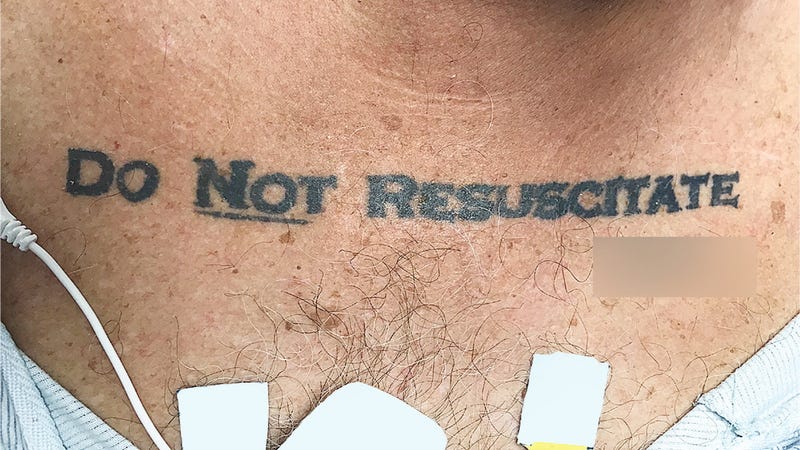

Unconscious Patient With 'Do Not Resuscitate' Tattoo Causes Ethical Conundrum at Hospital

George Dvorsky

Gizmodo

Originally published November 30, 2017

When an unresponsive patient arrived at a Florida hospital ER, the medical staff was taken aback upon discovering the words “DO NOT RESUSCITATE” tattooed onto the man’s chest—with the word “NOT” underlined and with his signature beneath it. Confused and alarmed, the medical staff chose to ignore the apparent DNR request—but not without alerting the hospital’s ethics team, who had a different take on the matter.

But with the “DO NOT RESUSCITATE” tattoo glaring back at them, the ICU team was suddenly confronted with a serious dilemma. The patient arrived at the hospital without ID, the medical staff was unable to contact next of kin, and efforts to revive or communicate with the patient were futile. The medical staff had no way of knowing if the tattoo was representative of the man’s true end-of-life wishes, so they decided to play it safe and ignore it.

The article is here.

Monday, November 27, 2017

Social Media Channels in Health Care Research and Rising Ethical Issues

Samy A. Azer

AMA Journal of Ethics. November 2017, Volume 19, Number 11: 1061-1069.

Abstract

Social media channels such as Twitter, Facebook, and LinkedIn have been used as tools in health care research, opening new horizons for research on health-related topics (e.g., the use of mobile social networking in weight loss programs). While there have been efforts to develop ethical guidelines for internet-related research, researchers still face unresolved ethical challenges. This article investigates some of the risks inherent in social media research and discusses how researchers should handle challenges related to confidentiality, privacy, and consent when social media tools are used in health-related research.

Here is an excerpt:

Social Media Websites and Ethical Challenges

While one may argue that regardless of the design and purpose of social media websites (channels) all information conveyed through social media should be considered public and therefore usable in research, such a generalization is incorrect and does not reflect the principles we follow in other types of research. The distinction between public and private online spaces can blur, and in some situations it is difficult to draw a line. Moreover, as discussed later, social media channels operate under different rules than research, and thus using these tools in research may raise a number of ethical concerns, particularly in health-related research. Good research practice fortifies high-quality science; ethical standards, including integrity; and the professionalism of those conducting the research. Importantly, it ensures the confidentiality and privacy of information collected from individuals participating in the research. Yet, in social media research, there are challenges to ensuring confidentiality, privacy, and informed consent.

The article is here.

Tuesday, November 7, 2017

Inside a Secretive Group Where Women Are Branded

Barry Meier

The New York Times

Originally published October 17, 2017

Here are two excerpts:

Both Nxivm and Mr. Raniere, 57, have long attracted controversy. Former members have depicted him as a man who manipulated his adherents, had sex with them and urged women to follow near-starvation diets to achieve the type of physique he found appealing.

Now, as talk about the secret sisterhood and branding has circulated within Nxivm, scores of members are leaving. Interviews with a dozen of them portray a group spinning more deeply into disturbing practices. Many members said they feared that confessions about indiscretions would be used to blackmail them.

(cut)

In July, Ms. Edmondson filed a complaint with the New York State Department of Health against Danielle Roberts, a licensed osteopath and follower of Mr. Raniere, who performed the branding, according to Ms. Edmondson and another woman. In a letter, the agency said it would not look into Dr. Roberts because she was not acting as Ms. Edmondson’s doctor when the branding is said to have happened.

Separately, a state police investigator told Ms. Edmondson and two other women that officials would not pursue their criminal complaint against Nxivm because their actions had been consensual, a text message shows.

State medical regulators also declined to act on a complaint filed against another Nxivm-affiliated physician, Brandon Porter. Dr. Porter, as part of an “experiment,” showed women graphically violent film clips while a brain-wave machine and video camera recorded their reactions, according to two women who took part.

The women said they were not warned that some of the clips were violent, including footage of four women being murdered and dismembered.

“Please look into this ASAP,” a former Nxivm member, Jennifer Kobelt, stated in her complaint. “This man needs to be stopped.”

In September, regulators told Ms. Kobelt they concluded that the allegations against Dr. Porter did not meet the agency’s definition of “medical misconduct,” their letter shows.

The article is here.

Thursday, September 28, 2017

What’s Wrong With Voyeurism?

David Boonin

What's Wrong?

Originally posted August 31, 2017

The publication last year of The Voyeur’s Motel, Gay Talese’s controversial account of a Denver area motel owner who purportedly spent several decades secretly observing the intimate lives of his customers, raised a number of difficult ethical questions. Here I want to focus on just one: does the peeping Tom who is never discovered harm his victims?

The peeping Tom profiled in Talese’s book certainly doesn’t think so. In an excerpt that appeared in the New Yorker in advance of the book’s publication, Talese reports that Gerald Foos, the proprietor in question, repeatedly insisted that his behavior was “harmless” on the grounds that his “guests were unaware of it.” Talese himself does not contradict the subject of his account on this point, and Foos’s assertion seems to be grounded in a widely accepted piece of conventional wisdom, one that often takes the form of the adage that “what you don’t know can’t hurt you”. But there’s a problem with this view of harm, and thus a problem with the view that voyeurism, when done successfully, is a harmless vice.

The blog post is here.

Tuesday, September 12, 2017

The consent dilemma

Elyn Saks

Politico - The Agenda

Originally published August 9, 2017

Patient consent is an important principle in medicine, but when it comes to mental illness, things get complicated. Other diseases don’t affect a patient’s cognition the way a mental illness can. When the organ with the disease is a patient’s brain, how can it be trusted to make decisions?

That’s one reason that, historically, psychiatric patients were given very little authority to make decisions about their own care. Mental illness and incompetence were considered the same thing. People could be hospitalized and treated against their will if they were considered mentally ill and “in need of treatment.” The presumption was that people with mental illness—essentially by definition—lacked the ability to appreciate their own need for treatment.

In the 1970s, the situation began to change. First, the U.S. Supreme Court ruled that a patient could be hospitalized against his will only if he were dangerous to himself or others, or “gravely disabled,” a decision that led to the de-institutionalization of most mental health care. Second, anti-psychotic medications came into wide use, effectively handing patients the power—on a daily basis—to decide whether to consent to treatment or not, simply by deciding whether or not to take their pills.

The article is here.

Wednesday, September 6, 2017

The Nuremberg Code 70 Years Later

Jonathan D. Moreno, Ulf Schmidt, and Steve Joffe

JAMA. Published online August 17, 2017.

Seventy years ago, on August 20, 1947, the International Medical Tribunal in Nuremberg, Germany, delivered its verdict in the trial of 23 doctors and bureaucrats accused of war crimes and crimes against humanity for their roles in cruel and often lethal concentration camp medical experiments. As part of its judgment, the court articulated a 10-point set of rules for the conduct of human experiments that has come to be known as the Nuremberg Code. Among other requirements, the code called for the “voluntary consent” of the human research subject, an assessment of risks and benefits, and assurances of competent investigators. These concepts have become an important reference point for the ethical conduct of medical research. Yet, there has in the past been considerable debate among scholars about the code’s authorship, scope, and legal standing in both civilian and military science. Nonetheless, the Nuremberg Code has undoubtedly been a milestone in the history of biomedical research ethics [1-3].

Writings on medical ethics, laws, and regulations in a number of jurisdictions and countries, including a detailed and sophisticated set of guidelines from the Reich Ministry of the Interior in 1931, set the stage for the code. The same focus on voluntariness and risk that characterizes the code also suffuses these guidelines. What distinguishes the code is its context. As lead prosecutor Telford Taylor emphasized, although the Doctors’ Trial was at its heart a murder trial, it clearly implicated the ethical practices of medical experimenters and, by extension, the medical profession’s relationship to the state understood as an organized community living under a particular political structure. The embrace of Nazi ideology by German physicians, and the subsequent participation of some of their most distinguished leaders in the camp experiments, demonstrates the importance of professional independence from and resistance to the ideological and geopolitical ambitions of the authoritarian state.

The article is here.

Thursday, August 3, 2017

The Trouble With Sex Robots

By Laura Bates

The New York Times

Originally posted

Here is an excerpt:

One of the authors of the Foundation for Responsible Robotics report, Noel Sharkey, a professor of artificial intelligence and robotics at the University of Sheffield, England, said there are ethical arguments within the field about sex robots with “frigid” settings.

“The idea is robots would resist your sexual advances so that you could rape them,” Professor Sharkey said. “Some people say it’s better they rape robots than rape real people. There are other people saying this would just encourage rapists more.”

Like the argument that women-only train compartments are an answer to sexual harassment and assault, the notion that sex robots could reduce rape is deeply flawed. It suggests that male violence against women is innate and inevitable, and can be only mitigated, not prevented. This is not only insulting to a vast majority of men, but it also entirely shifts responsibility for dealing with these crimes onto their victims — women, and society at large — while creating impunity for perpetrators.

Rape is not an act of sexual passion. It is a violent crime. We should no more be encouraging rapists to find a supposedly safe outlet for it than we should facilitate murderers by giving them realistic, blood-spurting dummies to stab. Since that suggestion sounds ridiculous, why does the idea of providing sexual abusers with lifelike robotic victims sound feasible to some?

The article is here.

Tuesday, June 6, 2017

Some Social Scientists Are Tired of Asking for Permission

Kate Murphy

The New York Times

Originally published May 22, 2017

Who gets to decide whether the experimental protocol — what subjects are asked to do and disclose — is appropriate and ethical? That question has been roiling the academic community since the Department of Health and Human Services’s Office for Human Research Protections revised its rules in January.

The revision exempts from oversight studies involving “benign behavioral interventions.” This was welcome news to economists, psychologists and sociologists who have long complained that they need not receive as much scrutiny as, say, a medical researcher.

The change received little notice until a March opinion article in The Chronicle of Higher Education went viral. The authors of the article, a professor of human development and a professor of psychology, interpreted the revision as a license to conduct research without submitting it for approval by an institutional review board.

That is, social science researchers ought to be able to decide on their own whether or not their studies are harmful to human subjects.

The Federal Policy for the Protection of Human Subjects (known as the Common Rule) was published in 1991 after a long history of exploitation of human subjects in federally funded research — notably, the Tuskegee syphilis study and a series of radiation experiments that took place over three decades after World War II.

The remedial policy mandated that all institutions, academic or otherwise, establish a review board to ensure that federally funded researchers conducted ethical studies.

The article is here.

Monday, March 20, 2017

When Evidence Says No, But Doctors Say Yes

David Epstein

ProPublica

Originally published February 22, 2017

Here is an excerpt:

When you visit a doctor, you probably assume the treatment you receive is backed by evidence from medical research. Surely, the drug you’re prescribed or the surgery you’ll undergo wouldn’t be so common if it didn’t work, right?

For all the truly wondrous developments of modern medicine — imaging technologies that enable precision surgery, routine organ transplants, care that transforms premature infants into perfectly healthy kids, and remarkable chemotherapy treatments, to name a few — it is distressingly ordinary for patients to get treatments that research has shown are ineffective or even dangerous. Sometimes doctors simply haven’t kept up with the science. Other times doctors know the state of play perfectly well but continue to deliver these treatments because it’s profitable — or even because they’re popular and patients demand them. Some procedures are implemented based on studies that did not prove whether they really worked in the first place. Others were initially supported by evidence but then were contradicted by better evidence, and yet these procedures have remained the standards of care for years, or decades.

The article is here.

Friday, March 10, 2017

Why genetic testing for genes for criminality is morally required

Julian Savulescu

Princeton Journal of Bioethics [2001, 4:79-97]

Abstract

This paper argues for a Principle of Procreative Beneficence, that couples (or single reproducers) should select the child, of the possible children they could have, who is expected to have the best life, or at least as good a life as the others. If there are a number of different variants of a given gene, then we have most reason to select embryos which have those variants which are associated with the best lives, that is, those lives with the highest levels of well-being. It is possible that in the future some genes are identified which make it more likely that a person will engage in criminal behaviour. If that criminal behaviour makes that person's life go worse (as it plausibly would), and if those genes do not have other good effects in terms of promoting well-being, then we have a strong reason to encourage couples to test their embryos with the most favourable genetic profile. This paper was derived from a talk given as a part of the Decamp Seminar Series at the Princeton University Center for Human Values, October 4, 2000.

The article is here.

Thursday, March 9, 2017

Why You Should Donate Your Medical Data When You Die

By David Martin Shaw, J. Valérie Gross, Thomas C. Erren

The Conversation on February 16, 2017

Here is an excerpt:

But organs aren’t the only thing that you can donate once you’re dead. What about donating your medical data?

Data might not seem important in the way that organs are. People need organs just to stay alive, or to avoid being on dialysis for several hours a day. But medical data are also very valuable—even if they are not going to save someone’s life immediately. Why? Because medical research cannot take place without medical data, and the sad fact is that most people’s medical data are inaccessible for research once they are dead.

For example, working in shifts can be disruptive to one’s circadian rhythms. This is now thought by some to probably cause cancer. A large cohort study involving tens or hundreds of thousands of individuals could help us to investigate different aspects of shift work, including chronobiology, sleep impairment, cancer biology and premature aging. The results of such research could be very important for cancer prevention. However, any such study could currently be hamstrung by the inability to access and analyze participants’ data after they die.

The article is here.

Saturday, October 15, 2016

Should non-disclosures be considered as morally equivalent to lies within the doctor–patient relationship?

Caitriona L Cox and Zoe Fritz

J Med Ethics 2016;42:632-635

doi:10.1136/medethics-2015-103014

Abstract

In modern practice, doctors who outright lie to their patients are often condemned, yet those who employ non-lying deceptions tend to be judged less critically. Some areas of non-disclosure have recently been challenged: not telling patients about resuscitation decisions; inadequately informing patients about risks of alternative procedures and withholding information about medical errors. Despite this, there remain many areas of clinical practice where non-disclosures of information are accepted, where lies about such information would not be. Using illustrative hypothetical situations, all based on common clinical practice, we explore the extent to which we should consider other deceptive practices in medicine to be morally equivalent to lying. We suggest that there is no significant moral difference between lying to a patient and intentionally withholding relevant information: non-disclosures could be subjected to Bok's ‘Test of Publicity’ to assess permissibility in the same way that lies are. The moral equivalence of lying and relevant non-disclosure is particularly compelling when the agent's motivations, and the consequences of the actions (from the patient's perspectives), are the same. We conclude that it is arbitrary to claim that there is anything inherently worse about lying to a patient to mislead them than intentionally deceiving them using other methods, such as euphemism or non-disclosure. We should question our intuition that non-lying deceptive practices in clinical practice are more permissible and should thus subject non-disclosures to the same scrutiny we afford to lies.

The article is here.

Tuesday, September 13, 2016

New York State Bans Use of Unclaimed Dead as Cadavers Without Consent

By Nina Bernstein

The New York Times

Originally posted August 19, 2016

A bill that Gov. Andrew M. Cuomo of New York signed into law this week concerns the dead as much as the living and signals a big change in public attitudes about what one owes the other.

The law bans the use of unclaimed bodies as cadavers without written consent by a spouse or next of kin, or unless the deceased had registered as a body donor. It ends a 162-year-old system that has required city officials to appropriate unclaimed bodies on behalf of medical schools that teach anatomical dissection and mortuary schools that train embalmers.

The state’s medical schools recently announced that they were withdrawing their opposition to the measure, saying they would meet any shortfall in cadavers by expanding their programs for private body donations.

The article is here.

Friday, August 19, 2016

'It Just Happened'

By Jake New

Inside Higher Ed

August 2, 2016

Either by choice or when required to do so by state legislation, colleges in recent years have moved toward a policy of affirmative consent.

The change moves colleges away from the old “no means no” model of consent -- frequently criticized by victims’ advocates as being too permitting of sexual encounters involving coercion or intoxication -- to one described as “yes means yes.” If the student initiating a sexual encounter does not receive an “enthusiastic yes” from his or her partner, the policies generally state, there is no consent.

Research by two California scholars, however, suggests that students’ understanding of consent is not in line with the new policies and laws. Instead, students often obtain sexual permission through a variety of verbal and nonverbal cues, both nuanced and overt, that do not always meet a strict definition of affirmative consent.

The article is here.

Thursday, July 14, 2016

Psychologists admit harsh treatment of CIA prisoners but deny torture

By Nicholas K. Geranios

The Associated Press

Originally published June 22, 2016

Two former Air Force psychologists who helped design the CIA’s enhanced interrogation techniques for terrorism suspects acknowledge using waterboarding and other harsh tactics but deny allegations of torture and war crimes leveled by a civil-liberties group, according to new court records.

The American Civil Liberties Union (ACLU) sued consultants James E. Mitchell and John “Bruce” Jessen of Washington state last October on behalf of three former CIA prisoners, including one who died, creating a closely watched case that will likely include classified information.

In response, the pair’s attorneys filed documents this week in which Mitchell and Jessen acknowledge using waterboarding, loud music, confinement, slapping and other harsh methods but refute that they were torture.

“Defendants deny that they committed torture, cruel, inhuman and degrading treatment, nonconsensual human experimentation and/or war crimes,” their lawyers wrote, asking a federal judge in Spokane to throw out the lawsuit and award them court costs.

The article is here.

Monday, July 11, 2016

Facebook has a new process for discussing ethics. But is it ethical?

Anna Lauren Hoffman

The Guardian

Originally posted Friday 17 June 2016

Here is an excerpt:

Tellingly, Facebook’s descriptions of procedure and process offer little insight into the values and ideals that drive its decision-making. Instead, the authors offer vague, hollow and at times conflicting statements such as noting how its reviewers “consider how the research will improve our society, our community, and Facebook”.

This seemingly innocuous statement raises more ethical questions than it answers. What does Facebook think an “improved” society looks like? Who or what constitutes “our community?” What values inform their ideas of a better society?

Facebook sidesteps this completely by saying that ethical oversight necessarily involves subjectivity and a degree of discretion on the part of reviewers – yet simply noting that subjectivity is unavoidable does not negate the fact that explicit discussion of ethical values is important.

The article is here.
